Data parsing has become a vital tool for most growing companies. With the help of parsers, it is possible to extract the significant insights from the massive amounts of data produced every day. Without data parsers, much of the information generated today would be lost and would be of no use to anyone. In this article, we will delve into parsing theory and discuss how to choose between custom and ready-made solutions.

What Is a Data Parser For?

To better understand how you can use data parsers, we first need to answer the question: what is data parsing? In basic terms, a parser is a tool that takes information in one data format and converts it into another. The definition becomes clearer if you look at a parser as a tool for harvesting and structuring large portions of information at a time. Parsers can be written in different programming languages and can rely on a wide range of libraries.

In most cases, parsers are set up to work with HTML only. A properly working parser can tell apart the types of information needed, collect them, and convert them into a new, convenient format. Depending on its settings, a parser can extract most of the popular data types found on the internet.

To understand what data parsing means, we also need to refer to data harvesting and proxies for web scraping. Information extracted by a web scraper then goes to a parser for further interpretation. With the help of data parsers, you get an easy-to-read, easy-to-process set of information extracted from a web page. Parsing also includes the ability to convert that information into different formats. For example, you can get a JSON or CSV file as the output from an HTML page.
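To make the HTML-to-JSON/CSV idea concrete, here is a minimal sketch that uses only Python's standard library. The sample table, the `TableParser` class, and the helper names are assumptions made up for illustration; a production parser would more likely build on one of the libraries discussed later in this article.

```python
import csv
import io
import json
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collects the text of every <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []      # finished rows
        self._row = None    # row currently being read, or None
        self._cell = None   # text chunks of the current cell, or None

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None and self._row is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None


def html_table_to_records(html):
    """Treat the first row as the header and return one dict per data row."""
    parser = TableParser()
    parser.feed(html)
    parser.close()
    if not parser.rows:
        return []
    header, *body = parser.rows
    return [dict(zip(header, row)) for row in body]


def records_to_csv(records):
    """Serialize the records as CSV text with a header line."""
    if not records:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(records[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


# Hypothetical input: a small product table as it might appear on a web page.
SAMPLE_HTML = """
<table>
  <tr><th>product</th><th>price</th></tr>
  <tr><td>Keyboard</td><td>49.90</td></tr>
  <tr><td>Mouse</td><td>19.50</td></tr>
</table>
"""

records = html_table_to_records(SAMPLE_HTML)
print(json.dumps(records, indent=2))  # JSON output
print(records_to_csv(records))        # CSV output
```

The same list of records feeds both outputs, which is the point of the intermediate step: once the page is parsed into a neutral structure, adding another export format is cheap.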

Building Your Own Data Parser

Now that we understand what parsing data means, we can talk about possible solutions. A company’s tech team can write a parser for a specific set of tasks or adopt one of the solutions already on the market. For some use cases, it is better to build the parser yourself.

Building your own parsing tool can be a good choice if you are trying to solve a unique task and need a tool designed specifically for it. In the long run, a custom solution can also be cheaper than other options on the market. Plus, you will have full control over development, the product, and the resulting output.

On the other hand, the initial cost of developing a parser and the cost of maintaining it can be significant. If you use your own development team, work on the parser takes up a large part of their time, limiting their ability to work on other projects. If the budget for testing and development does not cover all the steps, there will probably be bugs and problems with the parser’s performance. It is also important to remember that most parsing and scraping tasks should be backed by residential or datacenter proxies. Without them, you can face parsing errors, and the overall process can slow down significantly.

Creating a data parsing solution from scratch definitely has benefits, especially for specific kinds of tasks that cannot be done with other alternatives. However, keep in mind that development will take a large share of your budget and time. You also need an already skilled development team to achieve any notable results.

HTML Parsing Libraries

HTML parsing libraries can be a great way to add automation to your parsing or scraping solution. You can also consider using residential proxies with any of these setups for better geo-targeting and for separating data flows. Most of the HTML libraries below can be connected to your solution through their API.

Beautiful Soup remains one of the most widely used parsing libraries in Python. With a Beautiful Soup scraping project, you can extract data from HTML and XML files. The Python ecosystem also offers a rich set of other tools, such as Scrapy, designed specifically for scraping and parsing web data.

In JavaScript-based projects, you can try the Cheerio library. It is a good fit for data aggregation, offering fast manipulation of parsed markup. Another tool to look at is Puppeteer. It can automatically take screenshots or generate PDFs of the pages you need. This data can later be marked up for convenient use.

If you want to build your project in Java, look at the jsoup library. With it, you can fetch content from URLs and work with HTML content. The library works well for both web scraping and web parsing tasks.

For Ruby, you can turn to Nokogiri. This library lets you parse HTML and XML and offers a set of APIs for searching documents. With these tools, you can extract information and transform it into the needed format faster.

Existing Data Parsing Tools

Data parsers are usually tied to web scraping libraries and tools. For some tasks, full-featured web scrapers can be too powerful and complicated. In this case, you can use open-source parsing libraries or other free solutions. Many of their components are premade, so you can try to adapt them to your tasks. Also keep in mind that some parsing tasks can require special types of proxies, like a proxy for coupon aggregators or a proxy for local SEO. For example, large-scale data harvesting can be backed by static residential proxies.

However, if you are looking to solve a number of simple parsing assignments, you can look at ready-made commercial parsers that don’t require special programming skills from users. For example, Nanonets provides tools for extracting data and transforming it into the needed formats. You can also use the AI and ML capabilities of this parser to make your task easier.

Another tool that can be helpful is Import.io. This parsing tool can be used for data extraction from web pages or databases. The extracted data can be converted to CSV or Excel format.

For parsing email-related content, you can use Mailparser. This parsing solution lets you work with data from emails across most of the popular email providers. You can also parse attachments, such as images or PDF files.

Should I Buy a Data Parser?

In some cases, it is wise to consider buying a ready-made parser. If you are looking for a rapidly deployable tool or just want to try out what parsing can do, a prebuilt setup can be your choice. Also, by buying a ready-made parser, you can save money in the short term. You don’t need to plan expenses for building a team and maintaining the parser.

Also, most of the common issues in the process can be solved much faster with a turnkey solution. Parsers of this kind are also less likely to crash or run into other major problems during operation.

Using a ready-made parser will definitely save you resources and time if you are looking to solve a common task. However, this choice also has a couple of downsides. In the long term, this solution will likely cost you more than custom development. Plus, you probably won’t have as much control over the process with a bought parser.

In the end, it all comes down to your use case. For large companies, it is wiser to invest resources in a solution that fits perfectly and lasts a long time. For smaller businesses, it often comes down to buying a ready-made solution that can handle tasks right away and help them gain ground in the market.

Benefits of Data Parsing

Data parsers can be useful in many ways and in many industries. First of all, data parsing can save a significant amount of money and other resources if you are looking to automate repetitive tasks. Plus, data parsers can present the obtained information in a convenient format, so you can process and use it faster.

Flexibility in formats also brings flexibility in how this information can be used. With a ready-to-use set of documents on hand, you can send it for analysis or store it for further use. Parsing also helps structure data by getting rid of everything unrelated to your request. This way, the data you receive is not only structured but also of much higher quality.

Another benefit of data parsers lies in their ability to transform data from different sources into a single output. This way, you can combine large amounts of data and pass it on for further use. When a company handles a mass of information, this can be especially helpful for avoiding an unstructured data flow.
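As a rough illustration of merging several sources into a single output, the sketch below normalizes a JSON feed and a CSV export into one shared schema. The field names (`name`, `price_usd`, `title`, `cost`) and both sample feeds are hypothetical; real sources will have their own layouts.

```python
import csv
import io
import json


def parse_json_source(text):
    """Map the assumed JSON fields "name" and "price_usd" to the shared schema."""
    return [
        {"product": item["name"], "price": float(item["price_usd"])}
        for item in json.loads(text)
    ]


def parse_csv_source(text):
    """Map the assumed CSV columns "title" and "cost" to the shared schema."""
    reader = csv.DictReader(io.StringIO(text))
    return [{"product": row["title"], "price": float(row["cost"])} for row in reader]


def merge_sources(*record_lists):
    """Concatenate already-normalized records and sort them by product name."""
    merged = []
    for records in record_lists:
        merged.extend(records)
    return sorted(merged, key=lambda record: record["product"])


# Hypothetical inputs standing in for two differently formatted data sources.
JSON_FEED = '[{"name": "Mouse", "price_usd": "19.50"}]'
CSV_FEED = "title,cost\nKeyboard,49.90\n"

unified = merge_sources(parse_json_source(JSON_FEED), parse_csv_source(CSV_FEED))
print(unified)
```

Each source gets its own small adapter, while everything downstream only ever sees the `product` and `price` fields.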

In some cases, companies keep the vast majority of their data in outdated formats. Data parsing can solve this problem by finding the needed information and bringing it into a convenient form. Parsing tools can quickly process the entire array of data and make it usable by today’s standards. This is especially beneficial when you combine parsing with a proxy for real estate or proxies for reputation intelligence.

Problems When Parsing Data From Websites

Dealing with data through parsers is not an easy task. When parsing data from a website, you can face several common obstacles. The first of them is dealing with errors and inaccurate data. The information fed into a parser is usually raw and often contains inaccuracies.

Issues like this are most often found when parsing webpage data in HTML format. Modern browsers tend to make corrections and render even pages whose HTML contains syntax errors and typos. To parse a site like this, your parser should be able to automatically interpret and flag such mistakes.

When it comes to the question of how to extract data from a website using parsing, one of the main obstacles is the large amount of data that parsers need to process. Parsing and scraping take time and resources to do accurately. Under large and extensive loads, a parser can start showing performance issues or even crash. This can be partly overcome by running the parsing process in parallel across several inputs. However, this also brings high resource usage and server load. In this case, you can use rotating datacenter proxies to cope with even a sharply growing server load. You can also look at user agents for scraping to avoid being blocked.
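Running a parser in parallel across several inputs can be sketched with Python's `concurrent.futures` module. The `parse_title` function below is a deliberately tiny stand-in for a real parser, and the in-memory documents are made up for the example. Threads are used to keep the sketch simple; for CPU-heavy parsing in CPython, a process pool is usually the better fit.

```python
import re
from concurrent.futures import ThreadPoolExecutor

# A regex is enough for this toy task; real HTML parsing needs a proper parser.
TITLE_RE = re.compile(r"<title>(.*?)</title>", re.IGNORECASE | re.DOTALL)


def parse_title(html):
    """Return the text of the first <title> element, or None if there is none."""
    match = TITLE_RE.search(html)
    return match.group(1).strip() if match else None


def parse_many(documents, max_workers=4):
    """Parse all documents with a pool of workers, keeping the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(parse_title, documents))


# Hypothetical inputs; in practice these would be pages fetched by a scraper.
DOCUMENTS = [
    "<html><head><title>Page A</title></head></html>",
    "<html><head><title> Page B </title></head></html>",
    "<html><body>No title here</body></html>",
]

titles = parse_many(DOCUMENTS)
print(titles)
```

The `max_workers` value is the knob that trades speed for resource usage: more workers finish sooner but put more load on the machine, which is the trade-off described above.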

By separating inputs, you can also have the parser work with several data formats simultaneously. But to make this possible, your parser should be constantly updated and improved. Data formats evolve fast, and your parsing solution should keep pace with them to stay up to date.

Challenges in Data Parsing

Managing massive amounts of data is a tough process, and parsing data is no exception. Data parsers and other data collection tools inevitably face a number of obstacles along the way. Let’s look at several main problems that you will probably face while performing any serious parsing task.

In the vast majority of cases, you will have to work with raw data that lacks proper structure and other elements that would make processing easier. This, in turn, leads to errors, unreadable elements, and inconsistencies in the final results. HTML documents suffer from this problem the most, because modern browsers are built to be forgiving: they render a page correctly even if the raw markup contains a bunch of mistakes. As a result, pages with unclosed tags, inconsistencies, and otherwise malformed HTML stay online unnoticed. To overcome this, you need a fault-tolerant parser that can automatically detect and correct these mistakes.
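One simple way to detect such mistakes is to track open tags while reading the markup. The sketch below does this with the lenient `html.parser` module from Python's standard library. The `TagAuditor` class and `audit_html` helper are illustrative names, and the check is intentionally naive: it also flags end tags that HTML allows you to omit, such as `</li>`.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag in HTML.
VOID_TAGS = {
    "area", "base", "br", "col", "embed", "hr", "img",
    "input", "link", "meta", "source", "track", "wbr",
}


class TagAuditor(HTMLParser):
    """Keeps a stack of open tags and records tags that do not pair up."""

    def __init__(self):
        super().__init__()
        self.stack = []
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_TAGS:
            self.stack.append(tag)

    def handle_endtag(self, tag):
        if tag in VOID_TAGS:
            return
        if tag not in self.stack:
            self.problems.append(f"unexpected </{tag}>")
            return
        # Pop until the matching tag; everything popped on the way was never closed.
        while self.stack:
            open_tag = self.stack.pop()
            if open_tag == tag:
                break
            self.problems.append(f"unclosed <{open_tag}>")


def audit_html(html):
    """Return a list of human-readable problems found in the markup."""
    auditor = TagAuditor()
    auditor.feed(html)
    auditor.close()
    # Anything still open at the end of the document was never closed.
    for open_tag in reversed(auditor.stack):
        auditor.problems.append(f"unclosed <{open_tag}>")
    return auditor.problems


print(audit_html("<div><p>Hello <b>world</p></div>"))
print(audit_html("<div><span>text</div></p>"))
```

A report like this can be logged for review or used to decide when a page needs a more forgiving parsing strategy.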

You can also run into different data formats within one source. So, your parser should have the functionality needed to manage several data inputs and outputs at the same time. Data formats are constantly evolving, so you need to keep an eye on your software and scrapers and update them in time. In addition, you need to look for a parser that can import and export a number of different character encodings. This will allow you to work with data collected on both macOS and Windows systems.
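Handling several character encodings can be as simple as trying a list of candidates in order and exporting everything as UTF-8. The sketch below assumes the inputs are either UTF-8 or Windows-1252 (`cp1252`); the candidate list and function names are illustrative, and real projects may need more encodings or a detection library.

```python
def decode_with_fallback(raw, encodings=("utf-8", "cp1252")):
    """Try each encoding in order; return the text and the encoding that worked."""
    for encoding in encodings:
        try:
            return raw.decode(encoding), encoding
        except UnicodeDecodeError:
            continue
    # Last resort: keep what can be read and mark undecodable bytes with U+FFFD.
    return raw.decode("utf-8", errors="replace"), "utf-8 (lossy)"


def normalize_to_utf8(raw):
    """Re-encode input bytes as UTF-8 regardless of the source encoding."""
    text, _ = decode_with_fallback(raw)
    return text.encode("utf-8")


utf8_bytes = "café".encode("utf-8")
windows_bytes = "café".encode("cp1252")

print(decode_with_fallback(utf8_bytes))
print(decode_with_fallback(windows_bytes))
print(normalize_to_utf8(windows_bytes) == utf8_bytes)
```

The order of the candidates matters: UTF-8 is strict enough that text in another encoding usually fails to decode, so it is tried first.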

You need to come to terms with the fact that parsing takes a large amount of time and processing power. So, you should also be ready for a large data parsing project to cause performance issues on your system. In this case, it is wise to split the work and run the parser in parallel to collect data from several documents at the same time. This trick cuts the time required, but the pressure on your system resources will only rise. Overall, large-scale parsing tasks always bring a set of problems that require high-end equipment and tools.

Why Is Parsing Data Important?

Data parsing can be considered an important or even essential tool for many industries and business processes. For example, email scraping is one of the most widely used tasks for any parser. With so much important information and communication concentrated in email, parsing it becomes incredibly useful.

Businesses tend to use this feature to track and collect information from large sets of emails. With tools that let a parser collect data from emails based on keywords or specific rules, businesses can find the exact information they need in any amount of data. This area of work can also benefit from a proxy for price monitoring or an SEO proxy.
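Keyword-based email parsing can be sketched with the `email` package from Python's standard library. The two raw messages and the helper names below are made up for the example, and the code is not tied to any commercial tool mentioned in this article.

```python
from email import message_from_string, policy


def parse_email(raw_message):
    """Extract sender, subject, and plain-text body from a raw email string."""
    message = message_from_string(raw_message, policy=policy.default)
    body_part = message.get_body(preferencelist=("plain",))
    body = body_part.get_content() if body_part is not None else ""
    return {
        "from": str(message["From"] or ""),
        "subject": str(message["Subject"] or ""),
        "body": body.strip(),
    }


def find_by_keywords(raw_messages, keywords):
    """Return parsed emails whose subject or body mentions any of the keywords."""
    lowered = [keyword.lower() for keyword in keywords]
    matches = []
    for raw in raw_messages:
        parsed = parse_email(raw)
        haystack = f"{parsed['subject']} {parsed['body']}".lower()
        if any(keyword in haystack for keyword in lowered):
            matches.append(parsed)
    return matches


# Hypothetical messages standing in for a mailbox export.
RAW_INVOICE = (
    "From: billing@example.com\n"
    "Subject: Invoice 1042\n"
    "\n"
    "Your invoice total is 120.00 USD.\n"
)
RAW_NEWSLETTER = (
    "From: news@example.com\n"
    "Subject: Weekly update\n"
    "\n"
    "Here is what happened this week.\n"
)

matches = find_by_keywords([RAW_INVOICE, RAW_NEWSLETTER], ["invoice"])
print(matches)
```

Once each message is reduced to a small dictionary, the same records can be filtered by other rules or exported to the formats discussed earlier.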

A parser is also invaluable for looking through a large number of resumes. Recruiters can receive hundreds, if not thousands, of resumes for each vacancy. A parser can help highlight suitable candidates based on keywords or required previous experience. HR specialists can target specific skills, certifications, or talents.

In the same manner, a parser is a good tool for working with investments. You can manage information from several sources at the same time. A parser can track keywords in large amounts of data to bring you all the needed highlights. This is especially useful when you practice day trading and every second can cost you money. Overall, a parser can save time and effort while you focus on analysis and other more important tasks.

Data parsing is also useful in other analysis-driven fields. For example, market analysis can benefit from automated tracking of several competitors. A parser can keep an eye on all of the important data sources at the same time. With constantly changing markets, this use of a parser can become crucial for positioning and for identifying market patterns. For companies with many customers around the world, a parser is one of the most versatile and convenient tools for aggregating that amount of data. Even in smaller markets, a parser can become a source of statistics and other analytics data. You can also combine your parser with a proxy for travel fare aggregation or a recruitment proxy to get better results.

Frequently Asked Questions

Please read our Documentation if you have questions that are not listed below.

What is data parsing?

Data parsing can be described as the process of transforming data from one format to another. A parser is used for processing and structuring large amounts of data.

-

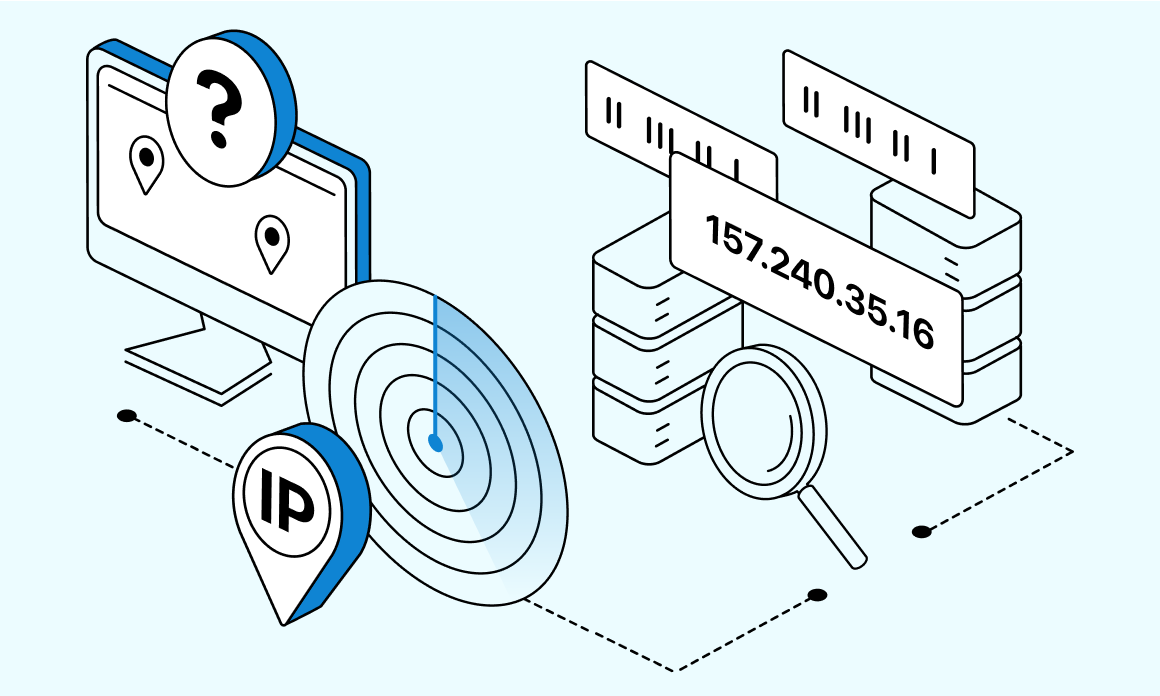

What proxy is the best choice for parsing and scraping data?

Depending on the task, you can choose different types of proxies. For example, if you need to process a lot of data at one time, datacenter proxies can be a good fit. But if you need to target specific locations and harvest data based on them, you can look at residential proxy options.

Which is better to use: your own data parser or an existing solution?

Using your own parser can be a good choice if you need a precise tool with detailed control. But solutions like this come at a cost. On the other hand, if you only need to get a couple of tasks done, or you simply don't need all the power of a custom setup, a ready-made solution will be your choice.
