The Internet offers an almost limitless amount of data and sources for any kind of research. To gather that data efficiently, you can learn web scraping with Beautiful Soup. In this article, we introduce the Python libraries used for this job and see what each tool does. This beginner guide to web scraping with Python and Beautiful Soup walks through the steps for creating your own parsing solution.

What is Web Scraping?

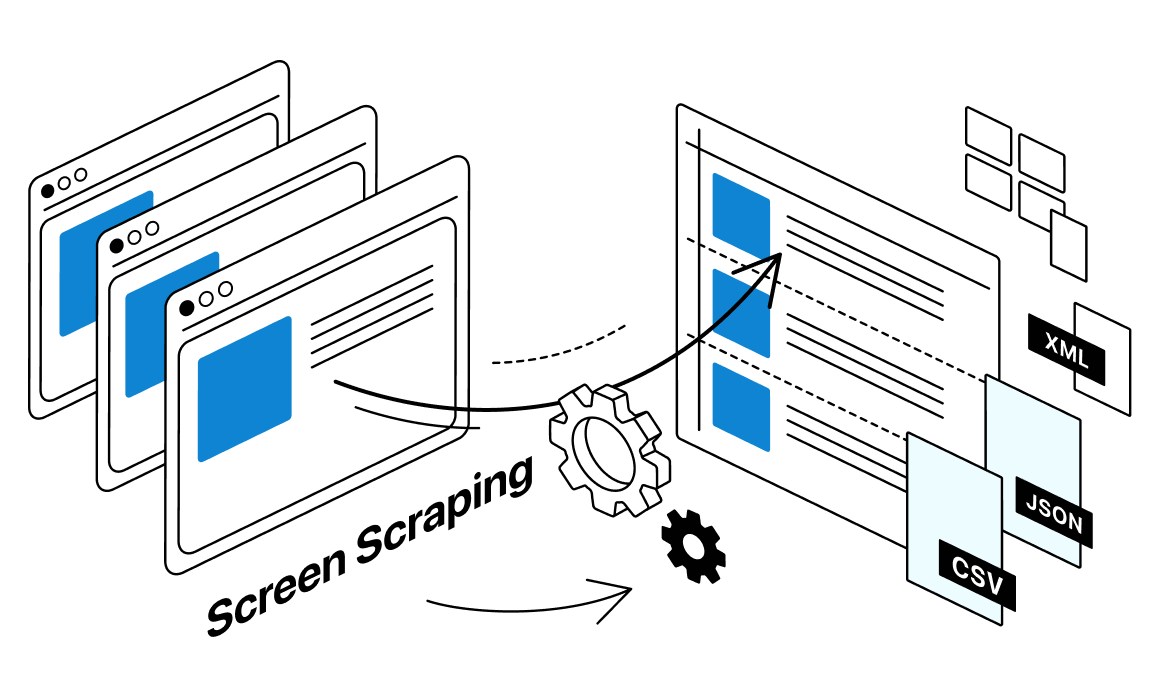

Web scraping is the process of harvesting data from sites and pages on the Internet. It can be done manually, by visiting the sites you are interested in and copying the data. In most cases, though, scraping implies automation and collecting data without human involvement. It is important to note that you should harvest only publicly available data. Even then, some sites have additional restrictions that can block scrapers.

In most cases, if you approach this process with care and don’t break any rules, you won’t face problems. Still, you should check what rules apply to the information on each site you plan to scrape. This is especially important if you are about to start a large project in this field.

You can scrape Google or any other site for the information you need with instruments designed specifically for this job, like SEO proxies or other tools. Most web scrapers work in the same manner. First, you provide one or more URLs of the pages you are interested in. The program then loads the HTML content from those pages and extracts the data. Finally, the data is converted into a format that is convenient to work with.

Nowadays, web scraping is used in many tasks. For example, it has become an everyday tool for web developers, eCommerce specialists, scientists, and journalists. For these tasks, you can use proxies for local SEO or recruitment proxies.

All this work requires collecting large-scale data in short periods of time. To make this easier, you can consider using proxies for web scraping and buy residential proxies. This way, you will be able to handle big projects that have geo-targeting at their core.

The collected information can later be presented as a file that is easy to analyze and use in further work. Web scraping can also serve as a tool for automation or for creating search bots and price tracking apps. A scraper paired with proxies for coupon aggregators or proxies for price monitoring can provide lots of valuable data.

Web Parsing in Python

For a long time, Python has been considered one of the most popular programming languages for web scraping projects. Well-designed libraries like Beautiful Soup or Selenium make it easy for even a newbie to start their own project and try their skills in the data harvesting field.

Python is often described as an easy language to read and write because its syntax is close to English. Python can also be a good choice for data science, AI, and machine learning projects.

More than this, Python works easily with other tools. For example, you can use a set of static residential proxies to target different locations in your data collecting projects. Or you can use the Anaconda distribution of Python and get easy access to a number of libraries for different tasks. Later in this article, we will go into more detail about the libraries used for web scraping with Python and Beautiful Soup.

Data Source Analysis

At the start of your data harvesting project, take a closer look at the sites and pages you want to scrape. This should always be the first step in any scraping-related task. Begin by looking through the main pages of the site and decide what information you need to obtain and in what order. It is good practice to click through different pages and menus to understand what kind of information you can expect to get.

Pay additional attention to the URLs of the different pages. A URL can contain a lot of useful data on its own. Some URLs can be separated into two main parts: the first, the base URL, points to the site or to one of its functions, and the second gives the location of a specific page on the site. URLs can also contain data about your requests to the site. These query parameters are usually located at the end of the URL, after a question mark.
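As a minimal sketch of how a URL breaks into these parts, the standard library module urllib.parse can split it for you. The address below is a made-up example, not a real page, and the variable names are chosen only for illustration.

```python
from urllib.parse import urlparse, parse_qs

# Hypothetical URL, used only for illustration.
url = "https://example.com/jobs/search?q=python&location=remote"

parts = urlparse(url)

# Base part: the scheme and the host of the site.
base_url = f"{parts.scheme}://{parts.netloc}"

# Location of the page on the site.
page_path = parts.path

# Request data at the end of the URL, parsed into a dictionary of lists.
query = parse_qs(parts.query)

print(base_url)   # https://example.com
print(page_path)  # /jobs/search
print(query)      # {'q': ['python'], 'location': ['remote']}
```

Changing the values in the query part of a URL is often enough to request a different result page from the same site.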

In the next step, you should explore the structure of each page you want to scrape. This step is especially important because you need to find exactly which elements to scrape later. You can study the structure of a site with the help of developer tools, which all modern browsers have built in. In Chrome on macOS, go through the menus View – Developer – Developer Tools. On Windows and Linux, open the three-dot menu and choose More Tools – Developer Tools.

These tools let you study any element of the site. For example, select an interesting part of the HTML code and see which fragment of the page is connected to it. Later, all this content can be processed with Beautiful Soup.

Collect Content From the Page

With an understanding of the page structure and a clear idea of the data you want to scrape, you can finally start harvesting information. First, you need to get the HTML code you want to scrape into your Python project. For this task, you can use the requests library.

Start by creating a new environment and installing all the needed packages. Then create a new file in your text editor, import the requests library, specify the URL of the page you want to scrape, and send a GET request to it. Printing the .text attribute of the response shows the same HTML code you inspected in the previous steps. After this, you should have all the needed HTML code in your hands.
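The download step could look roughly like the sketch below. It assumes the requests package is installed. The helper name fetch_html, the optional session parameter, and the 10-second timeout are choices made for this example, not part of the original tutorial.

```python
import requests


def fetch_html(url, session=None, timeout=10.0):
    """Download a page and return its HTML as a string.

    `session` is optional; passing your own requests.Session lets you
    reuse connections or plug in a stand-in object for testing.
    """
    http = session if session is not None else requests.Session()
    response = http.get(url, timeout=timeout)
    # Raise an exception for 4xx/5xx answers instead of parsing an error page.
    response.raise_for_status()
    # .text holds the decoded body of the response, i.e. the HTML code.
    return response.text
```

A call such as `html = fetch_html("https://example.com")` followed by `print(html)` would then show the page source, where the address stands in for the page you actually want to scrape.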

This method is useful only with sites that serve static HTML content. Sites of this type send all the HTML that users see on the page in the response itself, which makes any further work with the code much easier. You can also make the harvested code easier to read by running it through an HTML formatter, which fixes the indentation automatically. Plus, you can try to scrape multiple sites at the same time with the help of private proxy solutions.

Parsing HTML Code With Beautiful Soup

Now, with all the needed HTML code on hand, you can start working with it. The gathered code probably looks like a jumble of fragments that don’t relate to each other. But this code can easily be parsed into a useful, convenient document with Beautiful Soup.

Beautiful Soup is a library created specifically to parse structured data such as HTML. Scraping data with Beautiful Soup means that you can navigate and inspect HTML content in much the same way you did in the browser’s developer tools. The library provides a simple and intuitive way of searching and working with HTML elements.

Installing Libraries

To start working with Beautiful Soup, install the library through the terminal, for example with pip install beautifulsoup4 (and pip install requests if you have not installed it yet). After this, import the installed library into your existing project: import requests stays at the top of the file, and from bs4 import BeautifulSoup makes the Beautiful Soup class available.

From this point, you can start scraping data with Beautiful Soup. Create a new Beautiful Soup object and pass it the content you gathered earlier as input. As the second argument, pass “html.parser” to make sure the right parser is used for your HTML content.
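A minimal sketch of this step is shown below. It assumes the beautifulsoup4 package is installed. The HTML string is invented for the example and stands in for the code you downloaded earlier.

```python
from bs4 import BeautifulSoup

# Small stand-in for the HTML downloaded earlier (invented for this example).
html = """
<html>
  <head><title>Example Jobs</title></head>
  <body>
    <div id="results">
      <h2 class="title">Python Developer</h2>
      <h2 class="title">Data Engineer</h2>
    </div>
  </body>
</html>
"""

# The second argument names the parser; "html.parser" ships with Python.
soup = BeautifulSoup(html, "html.parser")

# The parsed document can now be navigated like a tree.
print(soup.title.string)  # Example Jobs
```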

HTML Extraction

Probably the most interesting thing about Beautiful Soup is its design. With this library on hand, a single call creates both the soup object and the parser at once.

At this step, try printing the result of soup.prettify(). This shows the whole tree of the HTML code you collected in an indented form. From here, explore the code for the information you need to parse first. Pick one part of the code, for example an element with a specific id, and continue working only with this element.
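The sketch below illustrates both actions: printing the indented tree and narrowing the work down to one element. The HTML and the id value "results" are assumptions made for the example; on a real page, use the identifiers you found in the developer tools.

```python
from bs4 import BeautifulSoup

# Invented HTML standing in for a downloaded page.
html = (
    '<div id="results"><h2 class="title">Python Developer</h2>'
    "<p>Remote</p></div>"
    '<div id="footer">Contact</div>'
)
soup = BeautifulSoup(html, "html.parser")

# Print the whole tree with one tag per line and nested indentation.
print(soup.prettify())

# Pick one part of the page and keep working only with it.
results = soup.find(id="results")
print(results.prettify())
```

Everything outside the selected element, such as the footer in this example, is left out of `results`, so later searches stay focused on the part of the page you care about.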

Extracting Content From HTML

When you have found all the needed parts of the code, you can begin extracting useful content from them and converting it into another form. For each object you found, use the .text attribute to return only its text without tags or other parts of the code.
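As an illustration, the sketch below collects several elements and keeps only their text. The tag name and class are hypothetical and belong to the invented HTML in the example.

```python
from bs4 import BeautifulSoup

# Invented HTML standing in for the part of the page picked earlier.
html = """
<div id="results">
  <h2 class="title">Python Developer</h2>
  <h2 class="title">Data Engineer</h2>
</div>
"""
soup = BeautifulSoup(html, "html.parser")

# find_all returns every matching element; class_ avoids the Python keyword.
title_tags = soup.find_all("h2", class_="title")

# .text drops the tags and returns only the text inside each element.
titles = [tag.text for tag in title_tags]
print(titles)  # ['Python Developer', 'Data Engineer']
```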

Methods of this kind can be used to gather data from all the HTML code you collected before. Some text may be extracted with small defects, like extra whitespace or line breaks. These can be fixed with text cleaning methods, such as Python’s string .strip() method. In the end, you should have all the targeted information as text that can be converted into other formats, like XML or plain text files.
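One possible way to clean the extracted text and store it is sketched below. The helper names clean_text and save_lines, the sample HTML, and the file name in the usage note are all assumptions made for this example.

```python
from pathlib import Path

from bs4 import BeautifulSoup

# Invented HTML with untidy spacing, as often happens on real pages.
html = """
<ul>
  <li>
      Python Developer
  </li>
  <li>  Data   Engineer </li>
</ul>
"""


def clean_text(raw):
    """Collapse runs of whitespace and trim both ends."""
    return " ".join(raw.split())


def save_lines(lines, path):
    """Write one item per line to a UTF-8 text file."""
    Path(path).write_text("\n".join(lines) + "\n", encoding="utf-8")


soup = BeautifulSoup(html, "html.parser")
raw_items = [li.text for li in soup.find_all("li")]
items = [clean_text(item) for item in raw_items]
print(items)  # ['Python Developer', 'Data Engineer']
```

Calling `save_lines(items, "titles.txt")` would then write the cleaned values to a plain text file, one per line.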

Conclusion

Python provides a lot of useful tools for your data harvesting projects. The requests library gives you an easy way to download static HTML code from a website. Beautiful Soup then helps with parsing the collected data. Together, these two instruments can cover most of your data collecting needs. More than this, you can look at special proxies for travel fare aggregation or proxies for real estate.

In this guide, you learned how to deal with web scraping using Python and Beautiful Soup. To power up any of your projects, also consider using a set of datacenter proxies to scrape large amounts of data without worrying about performance issues.

Frequently Asked Questions

Please read our Documentation if you have questions that are not listed below.

What is Web Scraping?

Web scraping is the collection of publicly available data from websites and web pages. Companies often use this technique to gather information about their products or ad campaigns.

What proxies are the best for web scraping tasks?

Depending on your specific case, you might want to use different sets of proxies. For example, if you scrape a lot of data at the same time, you can use rotating datacenter proxies. With their help, you can maintain high performance over a long time.

Why use Python for scraping data?

Python is a programming language that is fairly simple to learn and use, and it has libraries designed specifically for web scraping tasks. With the help of these tools, even beginners can harvest data from different sources.
