The volume of information available on the internet keeps growing, and businesses are always looking for new ways to collect and analyze big data. Web scraping has become one of the most important and popular techniques for online data collection.

Competition in the market is built largely around data analysis and information tracking, and much of it would not be possible without modern web scraping techniques. In the following paragraphs, we’ll learn how to perform web scraping with Ruby and which tricks to use along the way.

Ruby Web Scraping

Ruby is a popular open-source language. It supports object-oriented, procedural, and functional programming, and it was designed to be simple to read and intuitive to write. Thanks to these features, Ruby is a common choice for scraping and other tasks, alongside languages like Python and Java and frameworks like Scrapy, typically paired with rotating or regular proxies.

Ruby web scraping, like any other data aggregation process, can help you assemble the information you need about products, markets, and more. This data can be used for marketing, HR, or SEO purposes. For example, you can collect job postings from sources like LinkedIn, Reddit, or even Twitter.

Ruby can be an excellent option for a scraping project when you want to automate gathering information from websites like Amazon, BestBuy, eBay, or even Shopee. It can help scrape even sites with poor navigation or structure. With a Ruby web scraper and a set of datacenter proxies, you can gather information from most websites while reducing the risk of bans and restrictions.

Ruby also has a number of useful extensions, called gems. For instance, you can use Nokogiri together with Open-URI to extract data from a website. Nokogiri is a gem that parses documents and lets you search through HTML content. Open-URI, which is part of Ruby’s standard library, lets you open web resources directly, as if they were files. This can be useful for scraping sites like Zillow or search engines like Bing and DuckDuckGo. Combined with static residential proxies or other IP-changing tools like rotating proxies, this makes a good setup for scraping tasks of varying complexity. All in all, Ruby is a strong language for data collection tasks.
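
To make the idea of rotating proxies more concrete, here is a minimal sketch that hands out proxies in round-robin order using only Ruby's standard library. The `ProxyRotator` class, the `PROXY_URLS` list, and the proxy addresses are hypothetical names and placeholders invented for this illustration, not part of any library; real providers may expose rotation differently.

```ruby
require 'net/http'
require 'uri'

# Hypothetical proxy endpoints (placeholders); replace with your provider's values.
PROXY_URLS = [
  'http://user:secret@203.0.113.10:8080',
  'http://user:secret@203.0.113.11:8080'
].freeze

# Hands out proxies in round-robin order.
class ProxyRotator
  def initialize(proxy_urls)
    # Array#cycle yields an endless enumerator over the parsed proxy URIs.
    @proxies = proxy_urls.map { |proxy_url| URI.parse(proxy_url) }.cycle
  end

  # Returns a Net::HTTP client for target_url routed through the next proxy.
  # No connection is opened until a request is actually sent.
  def client_for(target_url)
    target = URI.parse(target_url)
    proxy = @proxies.next
    http = Net::HTTP.new(target.host, target.port,
                         proxy.host, proxy.port, proxy.user, proxy.password)
    http.use_ssl = (target.scheme == 'https')
    http
  end
end
```

Each call such as `ProxyRotator.new(PROXY_URLS).client_for('https://example.com/')` returns a client bound to the next proxy in the list, so consecutive requests leave from different IP addresses.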

Best Ruby Web Scraping Gems

A Ruby web scraper can draw on a variety of libraries and frameworks. In Ruby, they are called gems and are used just like regular libraries in other languages. For web scraping, there are libraries designed specifically for downloading website content, parsing HTML, and other tasks.

For example, you can use the Net::HTTP library, which ships with Ruby, to perform all the needed HTTP requests. However, its API is fairly verbose, so beginners need to keep their eyes wide open while working with it. If Net::HTTP does not meet your expectations, try HTTParty. This is another HTTP client that offers a simpler way to send requests for scraping.
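
As a rough illustration of what working with Net::HTTP involves, the sketch below builds a GET request with browser-like headers and sends it. The helper names `build_get_request` and `fetch`, the default User-Agent string, and the Accept-Language value are assumptions made for this example.

```ruby
require 'net/http'
require 'uri'

# Hypothetical default User-Agent used only in this example.
DEFAULT_USER_AGENT = 'Mozilla/5.0 (compatible; ExampleScraper/1.0)'

# Builds (but does not send) a GET request with browser-like headers.
def build_get_request(uri, user_agent: DEFAULT_USER_AGENT)
  request = Net::HTTP::Get.new(uri)
  request['User-Agent'] = user_agent
  request['Accept-Language'] = 'en-US,en;q=0.9'
  request
end

# Opens a connection, sends the request, and returns the Net::HTTPResponse.
def fetch(url, user_agent: DEFAULT_USER_AGENT)
  uri = URI.parse(url)
  Net::HTTP.start(uri.host, uri.port, use_ssl: uri.scheme == 'https') do |http|
    http.request(build_get_request(uri, user_agent: user_agent))
  end
end
```

Calling `fetch('https://example.com/')` returns a response whose `code` and `body` can then be inspected. With HTTParty, a comparable request is usually a single call such as `HTTParty.get(url, headers: { 'User-Agent' => '...' })`.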

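For example, the following script sends a search query to Google through a proxy and prints the title and link of the first result. The same approach applies to other targets, such as an e-commerce site like Amazon, with different URLs and selectors: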

require 'net/http'

require 'net/https'

require 'nokogiri'

require 'cgi'

require 'uri'

# Prompt the user for a search query

print 'Enter text: '

# gets.chomp reads a line from the console and removes the trailing newline.

# CGI.escape URL-encodes the input so that it is safe to place in a query string.

query = CGI.escape(gets.chomp)

# Set the target URL with the query parameter

# String interpolation (#{query}) inserts the escaped search text; hl=en requests results in English.

url = URI("https://www.google.com/search?q=#{query}&hl=en")

# Define the headers to mimic a real browser request

headers = {

  'User-Agent' => 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36'

}

# The User-Agent header identifies the client to the server. This value imitates Chrome 120 on 64-bit Windows 10.

# Specify your proxy information in the form http://username:password@ip:port

# Replace login, password, ip, and port with real values before running the script.

proxy_address = 'http://login:password@ip:port'

# Parse the string into a URI object so the host, port, username, and password can be read separately.

proxy_uri = URI.parse(proxy_address)

# Net::HTTP::Proxy returns a Net::HTTP subclass whose connections go through the given proxy.

proxy = Net::HTTP::Proxy(proxy_uri.host, proxy_uri.port, proxy_uri.user, proxy_uri.password)

# Create an HTTP object and make the request

http = proxy.new(url.host, url.port)

# The target URL uses HTTPS, so enable SSL/TLS for the connection.

http.use_ssl = true

# Send a GET request with the headers defined above; the server's reply is stored in response.

response = http.get(url.request_uri, headers)

# Stop with an error message if the status code is not 200

if response.code.to_i != 200

  abort("Bad request or proxy: #{response.code}")

end

# Parse the response body with Nokogiri into a document object that can be queried

doc = Nokogiri::HTML(response.body)

# Output status code and body length

puts '-----response-----'

puts "Status code: #{response.code}"

puts "Text length: #{response.body.bytesize} bytes"

# Find the first title and URL block

# The class names yuRUbf and LC20lb reflect Google's markup when this was written and may change.

first_block = doc.css('div.yuRUbf')[0]

abort('No result block found; the page markup may have changed') if first_block.nil?

# Text of the <h3> title inside the first result block

first_title = first_block.css('h3.LC20lb').text

# The href attribute of the first <a> element is the link of the search result

first_url = first_block.css('a')[0]['href']

# Adjust the URL if necessary: relative Google redirect links start with "/url?"

first_url = "https://google.com#{first_url}" if first_url.start_with?('/url?')

# Output the first title and URL

puts "Title: #{first_title}"

puts "Link: #{first_url}"
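
The URL-building and link-adjusting steps of the script can also be sketched as small reusable helpers built on Ruby's standard `uri` library. The function names `build_search_url` and `resolve_result_url` are hypothetical, and the assumption that redirect links carry the destination in a `q` parameter is an illustration of a commonly seen format rather than a guaranteed one.

```ruby
require 'uri'

# Builds the search URL; URI.encode_www_form escapes every parameter value.
def build_search_url(query, lang: 'en')
  URI::HTTPS.build(host: 'www.google.com', path: '/search',
                   query: URI.encode_www_form(q: query, hl: lang))
end

# Turns an href taken from a result block into an absolute URL.
# Assumption: redirect links look like "/url?q=<target>&...".
def resolve_result_url(href)
  return href unless href.start_with?('/url?')

  query_string = href.split('?', 2)[1].to_s
  params = URI.decode_www_form(query_string).to_h
  # Fall back to prefixing the host when no q parameter is present.
  params.fetch('q') { "https://www.google.com#{href}" }
end
```

Using `URI.encode_www_form` instead of manual string interpolation keeps every parameter escaped in one place, which helps when more query parameters are added later.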

Also pay attention to Nokogiri, whose API offers flexible HTML and XML parsing. With it, you can easily extract any needed data from HTML and XML documents.

Another option is the Mechanize library, which offers headless-browser-style functionality through a high-level API. It can automate interaction with web pages: Mechanize stores and sends cookies, follows redirects, opens links, and even submits forms. It also keeps a history, so you can track visited pages. Note that Mechanize does not execute JavaScript. Headless browsers, along with residential proxies, can also help reduce blocks, restrictions, and captchas during scraping.

The last tool we will talk about is Selenium. It is among the most commonly used tools for automating actions on web pages from a Ruby scraper. The Selenium library drives a real browser, instructing it to interact with the target website as a real user would. Tools like this are a mainstay of high-quality, large-scale data collection.

Conclusion

Web scraping is one of the most widely used and effective methods for gathering data from the internet. It allows you not just to gather data but also to organize, process, and store it.

Scraping can be performed with many different tools, languages, and frameworks, so the first major challenge is choosing the right ones. Depending on your tasks, you might need special sets of tools or libraries, or proxies for use with ScrapeBox or with your own scraping project.

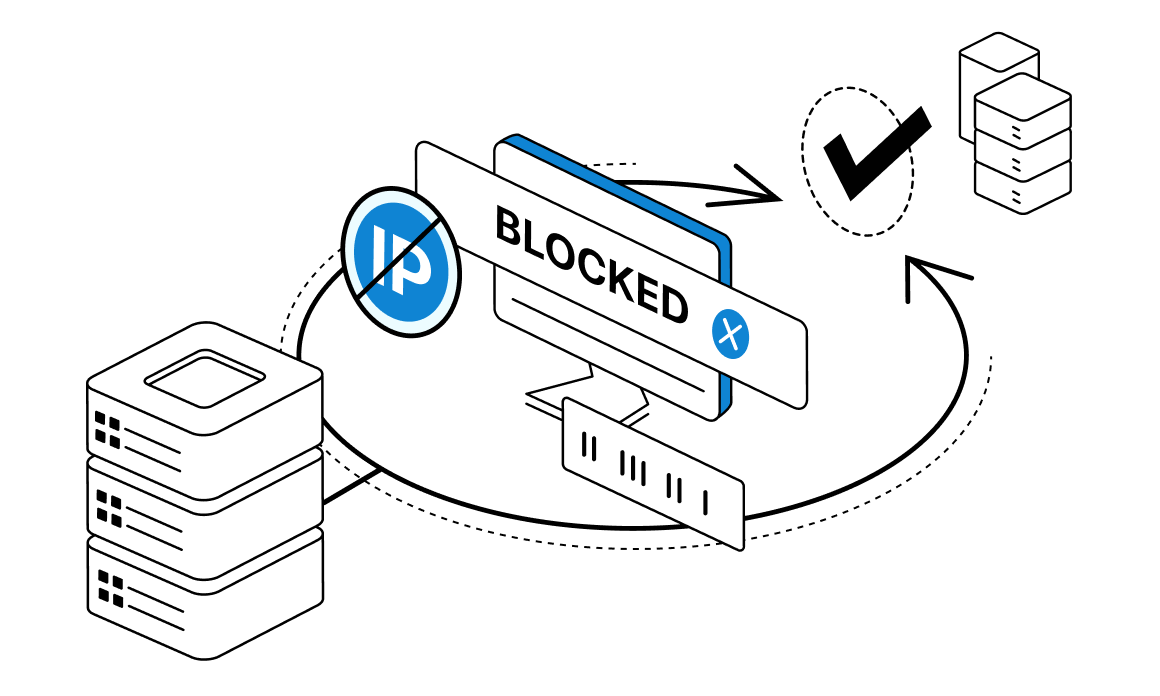

As we learned in this article, Ruby can be a good way to power up your scraping tasks, and datacenter rotating proxies or other IP-changing tools can help with blocks and restrictions from sites at any step. Ruby is widely used for scraping thanks to its abundance of ready-made, simple-to-implement libraries. You can choose the libraries you need and use them to build your own solution that takes into account all your data collection needs. This way, you can use web scraping to generate leads, perform SEO tasks, or scrape Amazon.

Frequently Asked Questions

Please read our Documentation if you have questions that are not listed below.

What is Ruby web scraping?

Ruby web scraping is online data collection with the help of the Ruby programming language. Along with Java and Python, Ruby is one of the popular choices for scraping tasks.

What kind of proxies are the best to use with Ruby web scraping?

Residential proxies are a versatile choice for most scraping projects. This type of proxy can significantly reduce the chance of being blocked.

Why use Ruby for data harvesting?

Ruby is a versatile and easy-to-use language. With a large set of scraping libraries, you can build a scraper that fits your requirements in a short time.
