Web scraping has become a core part of many business and professional tasks. Data aggregation, machine learning, and many other fields depend on scraping tools.

Most scraping software also relies heavily on proxy servers. This technology helps you avoid blocks, restrictions, captchas, and other limitations along the way. In the following paragraphs, we’ll discuss proxy servers for scraping and look at the main nuances of this topic.

What Is Web Scraping?

Scraping is a method of gathering data from websites. In most cases, a tool (or a browser) requests the HTML document of the target page and extracts the needed data from it.

You can gather and process the information manually, but the market offers an extensive range of tools designed specifically for this task. Scraping software can collect the needed URLs and parse data from each of them. Later, that data can be turned into an easy-to-use table.

This technology is widely used in many professional fields, such as SEO, marketing, and HR. With web scraping tools, companies can process significant amounts of data to stay competitive. For example, organizations can continuously track prices, social media, the press, and practically any web page for the information they need.

Overall, scraping with Java or other tools is a complicated procedure with multiple components, and scraping proxy servers rank among the most important. Without proxies, many sites will block data harvesting activity early on.

What Is a Proxy Server?

A proxy server is a computer that reroutes your Internet connections and serves as the entry point for your web requests. Therefore, if you use proxies with web scraping software, the proxy server will receive your request before it gets to the site.
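As a minimal sketch of this flow, the Python standard library can route requests through a proxy. The address below is a placeholder from an IP range reserved for documentation, not a real server, and `build_proxy_opener` is a hypothetical helper name used only for illustration.

```python
import urllib.request


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests via proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Placeholder address (203.0.113.0/24 is reserved for documentation).
opener = build_proxy_opener("http://203.0.113.10:8080")

# Uncomment to send a real request; the proxy receives it first and
# forwards it to the target site.
# with opener.open("https://example.com", timeout=10) as response:
#     html = response.read()
```

The target site then sees the proxy's IP address instead of yours.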

This technology can be helpful in many cases, both for work-related and personal tasks. For instance, you can access geo-restricted pages and sites, increase your security, or simply mask your regular IP. Furthermore, proxies can help with data collection, connection routing, firewall setup, and many other professional tasks.

As mentioned above, modern scraping software depends heavily on the quality of its web scraping proxies. Without them, you’ll often face blocks and restrictions from sites that flag your activity as automated or bot-related. To elaborate on this topic, let’s look at which types of proxies you can use for your tasks.

Proxy Types

Proxies for scraping operate on two primary protocols: HTTP and SOCKS. For scraping, the distinction between the two is rarely significant. Although HTTP is more popular and supported by more scraping apps, SOCKS might be slightly faster in some situations. In the end, your choice of protocol should largely depend on the scraping tools you plan to use.
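In practice, the protocol usually shows up only as the scheme of the proxy URL you hand to your tool. The sketch below builds such URLs; the helper names, host, ports, and credentials are all illustrative assumptions. Many HTTP clients accept a mapping like the one returned by `proxies_mapping` (for example, the `requests` library takes it as its `proxies` argument, with SOCKS support requiring an extra dependency), but check the documentation of the tool you actually use.

```python
from urllib.parse import quote

# Schemes this sketch accepts; your scraping tool may support others.
SUPPORTED_SCHEMES = {"http", "https", "socks5", "socks5h"}


def proxy_url(scheme, host, port, username=None, password=None):
    """Build a proxy URL, percent-encoding credentials if they are given."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    auth = ""
    if username and password:
        auth = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    return f"{scheme}://{auth}{host}:{port}"


def proxies_mapping(url):
    """Use the same proxy for both plain HTTP and HTTPS targets."""
    return {"http": url, "https": url}


http_proxy = proxy_url("http", "203.0.113.10", 8080)
socks_proxy = proxy_url("socks5", "203.0.113.10", 1080, "user", "p@ss")
```

Switching between HTTP and SOCKS then means changing one argument rather than rewriting the scraper.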

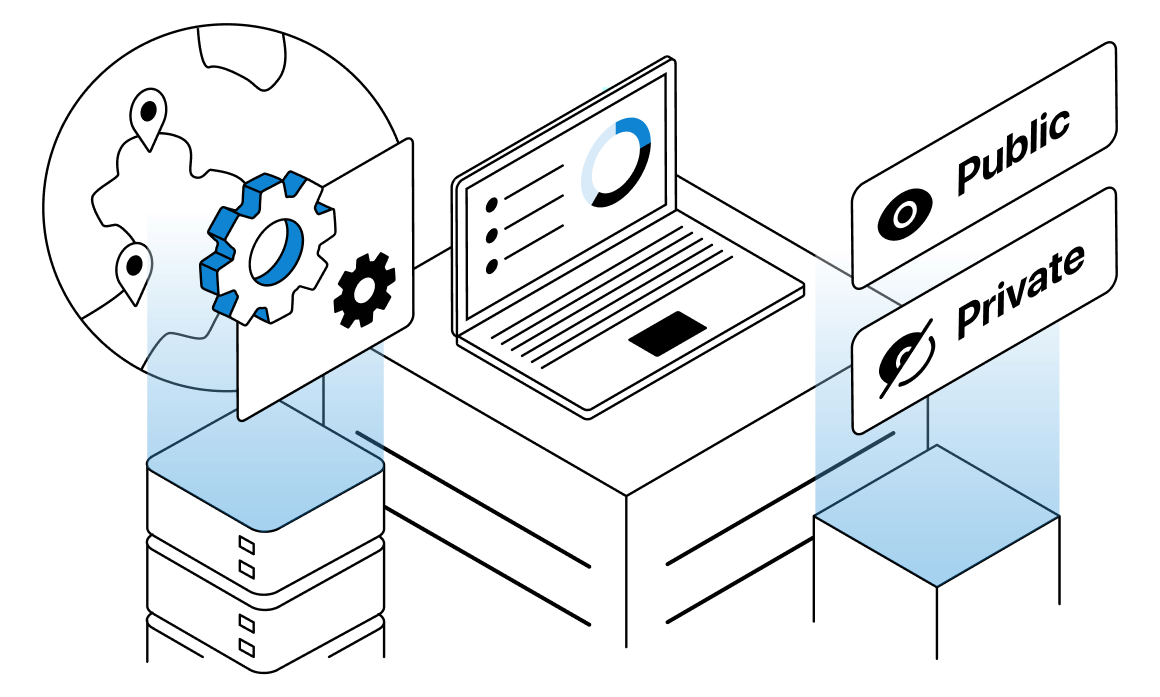

So, what kinds of scraping proxies are there? There are four primary types: datacenter, static residential, residential, and mobile. They differ in price, stealth, and overall reliability. Below, we’ll look at how each type performs in scraping tasks.

First, let’s talk about datacenter proxies. Their main feature is that they are not affiliated with any Internet service provider. All IPs in this case are assigned by commercial servers and may be shared between several users. In data harvesting, this can trigger blocks, although the result will differ from case to case. For example, you can use this type to collect data from job posting sites like LinkedIn, major social networks like Reddit or Twitter, or real estate platforms like Zillow.

On the other hand, datacenter proxies, including rotating ones, are a cheaper, reliable, and popular option for a wide variety of purposes. If you choose rotating datacenter proxies, much of the risk of blocks can be reduced.

The next category is residential proxies. As the name suggests, the IP in this case is assigned by an Internet service provider. This removes most of the risk of blocks: with these proxies, you can expect IPs that look almost identical to those of regular users. This type can prove its worth in most regular tasks, including scraping major retailers like Amazon, Shopee, BestBuy, and eBay.

However, this usually comes at a cost, because residential proxies are typically more expensive. This kind of data scraping proxy can also change its IP over time. Scraping tasks usually don’t require a sudden change of IP, so this may prove helpful in some conditions and be a deal-breaker in others.

Ultimately, it is better to have both of these types in your toolbox. You can also use static residential proxies to avoid unnecessary IP changes while scraping. With a static residential proxy, you can avoid IP address blocking and maintain connectivity for as long as necessary. For example, this type can show solid results in search engine scraping tasks, such as collecting data from Google, Bing, or DuckDuckGo.

The last type is mobile proxies, which are tied to mobile devices such as tablets or smartphones. IPs from these proxies also belong to real devices. Although they can sometimes be less expensive, these proxies tend to be less reliable than standard residential ones.

Proxies can also be divided into public and private ones. Public proxies are usually accessible to anyone for free, and a single server can be used by several people simultaneously. This kind of proxy is not suitable for web scraping tasks.

Scraping through a public proxy can even expose you to legal risk, because you can’t control what other users of the server do. If the server was used for some kind of illegal activity, you could be implicated as well. The second type, a private proxy, eliminates this possibility. With a private proxy, you use the server alone, without any interference from other users, and only you can read and handle the data passing through it.

Managing a Proxy Pool

You can use this guide to begin managing a proxy pool for web scraping. First, set up ban detection so your scraper can recognize different kinds of limitations and automatically avoid them. You may face not only direct blocks but also redirects or captchas, which should be detected and handled as quickly as possible.
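A ban check can start as a simple classifier over the response. The sketch below is one possible approach, not a complete detector: the status codes and captcha phrases are assumptions chosen for illustration, and real sites signal blocks in many different ways, so expect to tune them per target.

```python
# Assumed signals; adjust them for the sites you actually scrape.
BLOCK_STATUS_CODES = {403, 429, 503}
REDIRECT_STATUS_CODES = {301, 302, 303, 307, 308}
CAPTCHA_MARKERS = ("captcha", "unusual traffic", "verify you are human")


def classify_response(status, body=""):
    """Return 'ok', 'blocked', 'redirect', or 'captcha' for a response."""
    text = body.lower()
    # Captcha pages are often served with status 200, so check the body first.
    if any(marker in text for marker in CAPTCHA_MARKERS):
        return "captcha"
    if status in BLOCK_STATUS_CODES:
        return "blocked"
    if status in REDIRECT_STATUS_CODES:
        return "redirect"
    return "ok"
```

Anything other than `"ok"` is a cue to retire that proxy for a while and retry through another one.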

If you run into blocks, retry your requests through different proxies. Try to use throttling and randomization to make your connections harder to track. Also, keep track of sites that require all connections in a session to come from one IP: if you switch to another IP, the site will log you out. Some websites may additionally require an IP that belongs to a specific region or location. In this situation, you must use a proxy from a pool of IP addresses associated with that location.
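The sketch below combines three of these ideas: retrying through a different proxy, a randomized delay between requests, and pinning one proxy to a session for sites that require a stable IP. `ProxyPool`, `BlockedError`, and the `fetch_via_proxy` callable are hypothetical names. The callable stands in for whatever HTTP client you use and is assumed to raise `BlockedError` when it detects a ban. Region-specific pools are left out to keep the example short.

```python
import random
import time


class BlockedError(Exception):
    """Raised by the fetch callable when a proxy gets blocked (hypothetical)."""


class ProxyPool:
    def __init__(self, proxies, min_delay=1.0, max_delay=3.0, sleep=time.sleep):
        if not proxies:
            raise ValueError("proxy pool is empty")
        self.proxies = list(proxies)
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.sleep = sleep  # injectable so tests can skip real waiting
        self.sticky = {}    # session key -> proxy pinned to that session

    def pick(self, session_key=None, exclude=()):
        """Pick a random proxy, or the pinned one for a sticky session."""
        if session_key is not None and session_key in self.sticky:
            return self.sticky[session_key]
        # Prefer proxies not tried yet; fall back to the full pool.
        candidates = [p for p in self.proxies if p not in exclude] or self.proxies
        proxy = random.choice(candidates)
        if session_key is not None:
            self.sticky[session_key] = proxy
        return proxy

    def fetch(self, url, fetch_via_proxy, max_attempts=3):
        """Try the URL through up to max_attempts different proxies."""
        tried = []
        for _ in range(max_attempts):
            proxy = self.pick(exclude=tried)
            tried.append(proxy)
            # Randomized throttling makes the request pattern less regular.
            self.sleep(random.uniform(self.min_delay, self.max_delay))
            try:
                return fetch_via_proxy(url, proxy)
            except BlockedError:
                continue  # this proxy was blocked, try another one
        raise RuntimeError(f"all {max_attempts} attempts were blocked for {url}")
```

For a logged-in session, call `pool.pick(session_key="account-1")` once per request; the same key always returns the same proxy, so the site keeps seeing one IP.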

How Many Proxies You Need for Web Scraping

To estimate the number of proxies needed for a project, use this formula: the number of requests you need to send per second, divided by the number of requests each proxy server can handle per second, gives the number of proxy servers needed. For example, if you need to process 1000 requests per second and each server can only handle 10 requests per second, you should use 100 servers working simultaneously.

The number of possible requests may also be affected by the sites you select for data collection, which also determines the possible crawling frequency. For instance, some websites only allow each user to submit a specific number of requests per minute.
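The formula is easy to express in code. In this small sketch, `proxies_needed` is a hypothetical helper name, and rounding up is an added assumption so that a fractional result still gives you enough servers.

```python
import math


def proxies_needed(required_rate, rate_per_proxy):
    """Number of proxies needed to sustain required_rate requests per second."""
    if rate_per_proxy <= 0:
        raise ValueError("rate_per_proxy must be positive")
    # Round up: a fraction of a proxy still means one more server.
    return math.ceil(required_rate / rate_per_proxy)


print(proxies_needed(1000, 10))  # 100
```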
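To see how a site's limit shapes crawling frequency, here is a short sketch. It assumes the site counts requests per IP address, which is common but not guaranteed, and the numbers are made up for illustration.

```python
def min_delay_seconds(limit_per_minute):
    """Smallest pause between requests from one IP that stays within the limit."""
    if limit_per_minute <= 0:
        raise ValueError("limit_per_minute must be positive")
    return 60.0 / limit_per_minute


def max_requests_per_minute(pool_size, limit_per_minute):
    """Upper bound on total throughput if every proxy is used at the limit."""
    return pool_size * limit_per_minute


# Hypothetical site that allows 30 requests per minute per IP.
print(min_delay_seconds(30))            # 2.0
print(max_requests_per_minute(20, 30))  # 600
```

Staying somewhat below the computed ceiling leaves room for retries.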

How to Test Proxies for Web Scraping

Proxy tests for web scraping ought to cover three main categories: speed, security, and reliability. Let’s look at each of them in detail. Speed is one of the main parameters to keep an eye on. Slow proxies can cause timeouts, failed requests, or overall delays. You can measure proxy speed against different sets of URLs by using your scraper’s built-in functions or the API of a dedicated testing service.
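If you prefer to measure speed yourself, a rough timing loop is enough to compare proxies. In this sketch, `fetch` is a hypothetical callable that wraps your HTTP client, takes a URL and a proxy, and raises an exception on timeout or failure; the function names are assumptions as well.

```python
import statistics
import time


def measure_latency(fetch, urls, proxy):
    """Return per-URL latency in seconds, or None where the request failed."""
    results = {}
    for url in urls:
        start = time.perf_counter()
        try:
            fetch(url, proxy)
        except Exception:
            results[url] = None  # timeout or failed request
        else:
            results[url] = time.perf_counter() - start
    return results


def median_latency(results):
    """Median of the successful timings, or None if every request failed."""
    timings = [t for t in results.values() if t is not None]
    return statistics.median(timings) if timings else None
```

Running the same URL set through each proxy and comparing medians gives a fairer picture than a single request, since individual timings vary a lot.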

Then you can investigate the security side of proxies. Your data should be kept private and secure all the way to the end. Dedicated proxy testing services can assist you here as well; they may rate a proxy based on its SSL certificate data. Of course, you also need to ensure that when you scrape a website through a proxy, your servers function reliably, so you avoid timeouts or offline periods.

When you start web scraping using a proxy, try libraries like Scrapy, Beautiful Soup, and Selenium for testing. Scrapy and Selenium can send requests through rotating proxies or any other type of server, and Beautiful Soup can parse the responses they return. In this manner, you can check all of the primary proxy parameters at once.
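Reliability can be approximated as a success rate over repeated checks. The sketch below assumes a hypothetical `check` callable that returns `True` when a request through the proxy succeeds and raises or returns `False` otherwise. It does not cover the security side, such as certificate inspection.

```python
def success_rate(check, proxy, attempts=20):
    """Fraction of attempts in which check(proxy) succeeded."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    successes = 0
    for _ in range(attempts):
        try:
            if check(proxy):
                successes += 1
        except Exception:
            pass  # count timeouts and connection errors as failures
    return successes / attempts
```

Spreading the attempts over hours rather than seconds helps catch the offline periods mentioned above.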

Conclusion

In general, proxy technology is essential for many online activities, including data collection. We covered every major proxy type and protocol in this article and looked at each of them from a scraping perspective.

With the right software and the knowledge from this article, you can find proxy servers that meet all of your needs, both for scraping with Ruby and for other tasks. You can also try several sets of proxies and compare them on your exact tasks to find out which one works best.

Frequently Asked Questions

Please read our Documentation if you have questions that are not listed below.

Why is web scraping so popular?

Web scraping has become one of the main methods for collecting and managing large amounts of data. This technology is frequently used in SEO, marketing, and HR.

What kind of proxies will be best for your web scraping tasks?

You can use different types of proxies depending on your exact case. Residential proxies can be a strong choice because of their good compatibility and high chance of remaining undetected while scraping.

Why does web scraping software need to use proxies?

Web scraping software needs proxies because of the restrictions and limitations that sites impose on scraping. With proxies, you can collect the needed information and stay secure during the process.
