Web scraping has become an essential tool for many organizations and businesses. Competition in today’s market is built around collecting and analyzing data. Web scraping can also support SEO, HR, and marketing tasks in almost any business.

Web scraping is a complex process during which many factors need to be taken into account. In the following paragraphs, we’ll learn what tricks to use and how to manage a proxy to scrape Google.

Why Is Scraping Important for Your Business?

It’s no secret that Google is the main provider of a wide variety of services for regular users, businesses, and even corporations. This makes Google one of the richest sources of material for data scraping. Prices, market statistics, and other crucial data can be extracted from Google-owned services.

For example, you can scrape Google or other search engines like DuckDuckGo to monitor your competitors or to run different kinds of market analysis. You can also generate new leads and identify market areas for future growth.

What Can Be Scraped on Google?

The first thing that comes to mind when thinking about Google is the search engine or the Chrome browser. However, Google has a wide range of services for many different online tasks that you or your business can use.

With Google Scholar, you can search for articles on almost any popular subject you can imagine. In the same way, Google Places will give you information about all of the organizations in an area that have registered with the service.

A Google Patents search can provide you with information about patents currently in force worldwide. Google Images can also provide an extensive base of scraping material for any of your purposes. Google Videos is another service with a wide range of content to collect for your project. In the same manner, you can utilize Google Shopping, News, Trends, and Flights.

All of these services can provide you with current, regularly updated data in many different fields. With Google services alone, you can build the data set you need in almost any category.

What Are the Problems With Scraping Google Sites?

Google, like any other resource, does not encourage data scraping. So in some cases, you can face different kinds of restrictions on your connection or data aggregation processes.

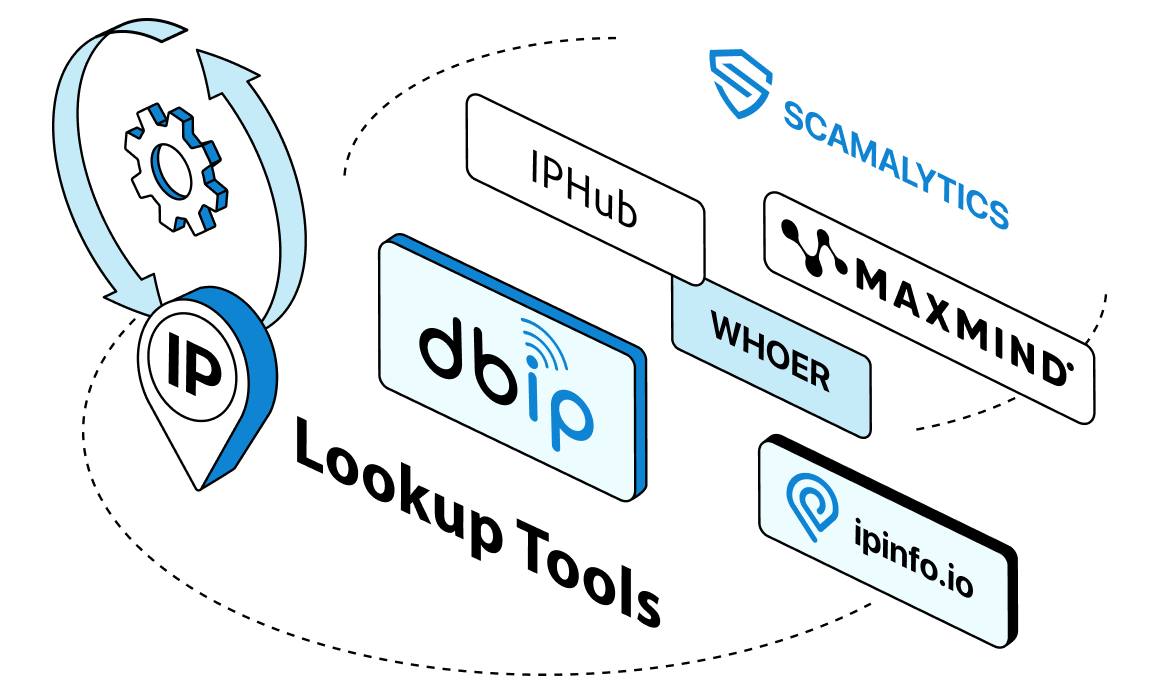

Initially, you may come across IP blocks and subsequent IP tracking. In this situation, Google simply restricts access to its services from the flagged IP address. This can happen when you send many requests to the website from a single IP at the same time, which usually leads to your device being associated with bot activity. Almost all sites also limit how many requests a single address may send. When you use up that allowance, you can face automatic IP address blocking.

Another kind of problem lies in obtaining geo-restricted content. Google or some of its services may be blocked in your country. In addition, users themselves can restrict access to the content they post. Under these circumstances, you can use static residential proxies to connect from a country where none of these restrictions apply. You can use proxies with ScrapeBox in the same way to collect any needed data.

The last obstacle is Google’s captchas. Most websites use captchas to fight bot activity nowadays. If your activity or the speed of your requests looks suspicious to the site, you will be forced to solve them.

What Are Some Ways to Overcome the Barriers to Scraping on Google?

Web scraping tasks can confront you with a lot of obstacles, especially when you are trying to collect information from large services like Google. Let’s see what tricks you can use to avoid some of the problems in the process.

First of all, try to rotate your IP addresses. With rotating datacenter proxies or other tools that support rotation, such as Ruby libraries, you can hide your system from anti-bot software. If you send lots of requests from a single IP, be ready to face restrictions or bans. Rotation also decreases the chance of encountering captchas.
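To illustrate IP rotation, here is a minimal Python sketch that cycles through a proxy pool in round-robin order using only the standard library. The proxy addresses are placeholders from a documentation-only range, and the helper names (`make_rotator`, `build_opener_for`, `fetch`) are hypothetical, so treat this as one possible approach rather than a finished scraper.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints (documentation-only addresses); use your own pool.
PROXY_POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def make_rotator(proxies):
    """Return a function that hands out proxies in round-robin order."""
    cycle = itertools.cycle(proxies)
    return lambda: next(cycle)


def build_opener_for(proxy_url):
    """Build a urllib opener that routes HTTP and HTTPS traffic through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


next_proxy = make_rotator(PROXY_POOL)


def fetch(url, timeout=10):
    """Fetch url through the next proxy in the pool (performs a real request)."""
    opener = build_opener_for(next_proxy())
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Each call to `fetch` leaves through a different address, so no single IP carries the whole request volume.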

If you face a captcha anyway, you will need a way to solve it. In short, you can solve captchas manually or use specialized software or services built for this purpose. If you are planning to launch a large-scale scraping project, this will be crucial to maintaining the required speed.

Counterintuitively, it helps to reduce the speed at which you scrape data from the site and add intervals between your requests. Modern bots and proxies for web scraping can maintain rapid speeds and gather a great deal of information. Websites are able to track and block such activity, and a flood of requests can make the site respond even more slowly. This is why you need to artificially reduce the scraping rate and send requests with pauses between them.
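The pauses described above could be sketched in Python as follows. The 2 to 5 second default range and the function names are assumptions chosen for illustration, and the `fetch` argument stands for whatever download function your scraper already uses.

```python
import random
import time


def polite_delay(min_seconds=2.0, max_seconds=5.0, rng=random):
    """Pick a random pause length so requests do not arrive at a fixed rhythm."""
    return rng.uniform(min_seconds, max_seconds)


def scrape_slowly(urls, fetch, min_seconds=2.0, max_seconds=5.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # No pause before the first request, a random one before each later request.
            sleep(polite_delay(min_seconds, max_seconds))
        results.append(fetch(url))
    return results
```

Randomizing the pause, rather than sleeping for a fixed time, makes the traffic pattern look less mechanical.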

You can also rotate user agents. Websites can examine the information about your system that the user agent string contains, so varying the user agent across your connections directly reduces the chances of being flagged and blocked. Here, too, you can use a custom pool of premade user agents or rely on special software and APIs for user agent generation.
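As a rough Python sketch of a premade user agent pool, the snippet below attaches a randomly chosen `User-Agent` header to each request. The strings in `USER_AGENTS` are illustrative examples only and `build_request` is a hypothetical helper; a real pool should be kept up to date.

```python
import random
import urllib.request

# Illustrative user agent strings; a real pool should be refreshed regularly.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:125.0) Gecko/20100101 Firefox/125.0",
]


def build_request(url, user_agents=USER_AGENTS):
    """Create a Request object carrying a randomly chosen User-Agent header."""
    headers = {"User-Agent": random.choice(user_agents)}
    return urllib.request.Request(url, headers=headers)
```

The returned object can be passed to `urllib.request.urlopen` or to an opener configured with a proxy.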

In the same way, you can use a headless browser to increase scraping speed. Some Google services use extensions and content that can seriously slow down or interrupt the data collection process. In this case, a headless browser lets you harvest information without any GUI and without downloading additional content from the page.

Finally, you can use a proxy to scrape the Google cache. This method can also be useful for avoiding bans and restrictions in the process. When working with the cache, your scraper requests only a copy of the needed information. To put it differently, gathering the data does not require direct access to the website in this case.

The Best Proxies for Google Scraping Without Being Blocked

To understand what kind of proxy will be best for scraping Google, we need to say a few words about each of them. These days, the most common proxy types can be split into two main categories: residential proxies and datacenter proxies.

Datacenter proxies can be a good choice for speed-sensitive projects. They can be an excellent option for scraping software if you need to stream massive volumes of data or analyze a data flow directly. However, these proxies can present significant challenges when it comes to Google scraping. IP addresses that belong to big data centers can be tracked more easily, so it is more likely that your server will be flagged. In this scenario, you can use rotating datacenter proxies to get a new IP for each new connection.

Alternatively, you can look into residential proxies. As the name suggests, these servers get their IP addresses from a regular ISP. This way, sites will see each of your connections as a regular one coming from an ordinary home PC. Residential proxies can also give you a more flexible choice of server location. In the end, Google scraping can be done with either type of proxy. Overall, the residential type will be slightly more flexible and universal in use.

Some Tips

Here is a list of some tricks and rules that you can use for your future scraping projects on Google, Bing, and other services. First of all, try to avoid free proxies. In most cases, free proxies pose a direct threat to your private information. In addition, such proxies are usually shared between several users, so the chances of your IP being flagged are very high.

Also, in your scraping projects, try to set a rate limit for your proxy servers. This technique can help reduce suspicion from Google in any use case. You just need to configure each proxy in your pool to send requests at intervals of 3 to 6 seconds. This can protect you from extra scrutiny on Google’s side.
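One way to enforce the 3 to 6 second interval per proxy is a small scheduler that tracks when each proxy is next allowed to send a request. The Python class below is a minimal sketch under that assumption; `ProxyScheduler` and its `acquire` method are hypothetical names, not part of any proxy library.

```python
import random
import time


class ProxyScheduler:
    """Hand out proxies so that each one rests 3 to 6 seconds between uses."""

    def __init__(self, proxies, min_rest=3.0, max_rest=6.0, clock=time.monotonic):
        self.min_rest = min_rest
        self.max_rest = max_rest
        self.clock = clock
        # Every proxy is ready immediately at start-up.
        self.ready_at = {proxy: clock() for proxy in proxies}

    def acquire(self):
        """Return (proxy, wait): the soonest-ready proxy and the seconds to wait for it."""
        now = self.clock()
        proxy = min(self.ready_at, key=self.ready_at.get)
        wait = max(0.0, self.ready_at[proxy] - now)
        # Book the next slot for this proxy after a randomized rest period.
        rest = random.uniform(self.min_rest, self.max_rest)
        self.ready_at[proxy] = now + wait + rest
        return proxy, wait
```

The caller is expected to sleep for `wait` seconds before sending the request through `proxy`. With a large enough pool, the overall scraper stays busy while each individual address remains slow.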

The last rule concerns captchas. Google uses them to guard its services from harmful bot activity, so before banning your access, Google will usually send you a captcha to solve. Treat this as a sign that it is time to switch to another proxy server for further scraping.
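A scraper can apply this rule automatically by checking each response for signs of a captcha or block page. The Python heuristic below is only a sketch: the status codes, the `/sorry/` URL fragment, and the "unusual traffic" phrase are assumptions about how a Google block page typically looks and may change at any time.

```python
# Assumed markers of a Google captcha or block page; verify against real responses.
BLOCK_STATUS_CODES = (429, 503)
BLOCK_URL_FRAGMENT = "/sorry/"
BLOCK_TEXT_MARKER = "unusual traffic"


def looks_blocked(status_code, final_url, body_text):
    """Heuristically decide whether a response is a captcha or block page."""
    if status_code in BLOCK_STATUS_CODES:
        return True
    if BLOCK_URL_FRAGMENT in final_url:
        return True
    return BLOCK_TEXT_MARKER in body_text.lower()
```

When `looks_blocked` returns `True`, the scraper can retire the current proxy for a while and retry the request through another one.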

Frequently Asked Questions

Please read our Documentation if you have questions that are not listed below.

Why might you want to scrape Google?

Google has many different services that cover most popular search needs. So, with Google scraping, you can find almost any data you need for your project.

What kind of proxy is best for scraping Google?

Residential proxies can be the best choice for most of your scraping projects. But you can also look at datacenter options if you need a faster, higher-throughput solution for your tasks.

What data can you collect from Google?

Google can provide you with all kinds of articles, marketing information, images, and videos. With the right crawler, you can find almost any type of information.
