The internet harbors vast oceans of data, but accessing these insights requires decoding the web’s inner workings. Web scraping provides the keys to unravel HTML, CSS and JavaScript, translating raw code into understandable information.

Scrapers act as translators – taking the web’s jumbled mass of HTML, CSS, and JavaScript and converting it into structured datasets ready for interpretation. With the right tools, analysts can parse meaning from the web’s endless maze of public pages. Web scraping brings logic to the internet’s chaos.

The basics of web data extraction

Tapping the true potential of the internet requires looking beyond the surface. Web scrapers dive beneath the UI, exploring the underlying code to discover value within.

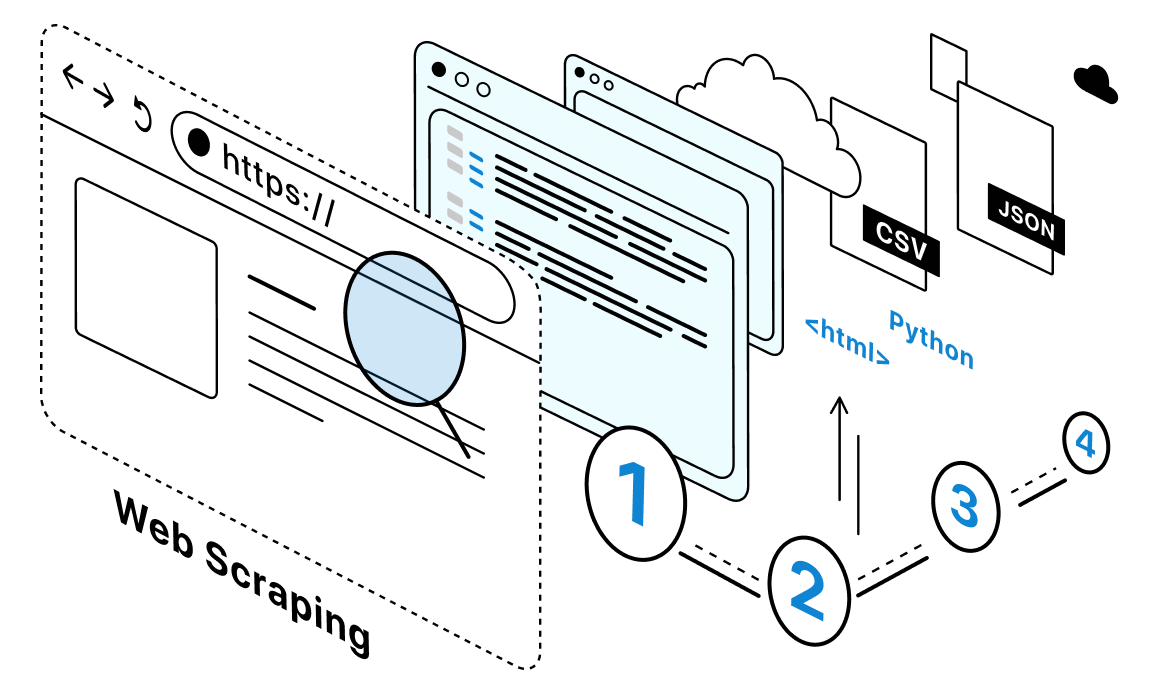

A typical data collection workflow has four steps:

- Identify the target site URL and pages.

- Write a scraper using Python libraries like Beautiful Soup, or set up a no-code extraction tool.

- Run the scraper to extract the required data points from the raw HTML.

- Format and export the data as CSV, JSON, or another structured format.
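The four steps above can be sketched in a few lines of Python. This is a minimal illustration, not a production scraper: the markup in `SAMPLE_HTML`, the `product`, `name`, and `price` class names, and the helper names are all assumptions made for the example. It also uses the standard library's `html.parser` in place of Beautiful Soup so that it runs without extra installs.

```python
import csv
import io
import json
from html.parser import HTMLParser

# Hypothetical page markup; a real scraper would download this from the target URL.
SAMPLE_HTML = """
<ul id="products">
  <li class="product"><span class="name">Widget</span><span class="price">9.99</span></li>
  <li class="product"><span class="name">Gadget</span><span class="price">24.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of the name and price spans inside each product item."""

    def __init__(self):
        super().__init__()
        self.rows = []      # one dict per product found so far
        self._field = None  # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "li" and "product" in classes:
            self.rows.append({})
        elif tag == "span" and self.rows:
            for field in ("name", "price"):
                if field in classes:
                    self._field = field

    def handle_data(self, data):
        if self._field and data.strip():
            self.rows[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def extract_products(html):
    """Parse the HTML and return a list of {'name': ..., 'price': ...} dicts."""
    parser = ProductParser()
    parser.feed(html)
    return parser.rows


def to_csv(rows):
    """Serialize the extracted rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = extract_products(SAMPLE_HTML)
print(to_csv(rows))
print(json.dumps(rows, indent=2))
```

In practice the HTML would come from an HTTP request, and a dedicated parsing library usually copes better with messy real-world markup than this hand-written parser.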

Keep in mind that most scraping tasks are likely to require a rotating proxy or another set of anonymizing tools.

Web scraping use cases

Many businesses rely on web scraping to power key functions:

- Price monitoring – Scrape prices from competitor sites for dynamic pricing. Common sources for this kind of scraping include Amazon, Shopee, Best Buy, and eBay.

- Lead generation – Build lead lists by scraping contact information from directories.

- Market research – Analyze trends from data points gathered across the web. For example, you can perform LinkedIn scraping or collect data about job postings on other sites. Market research also applies to rental and real estate businesses, where Zillow scraping is a good place to start.

- Content aggregation – Scrape news sites, blogs, and similar sources to curate content. Scraping Twitter or other major news sources is a common starting point.

- Data for machine learning – Web data powers the AI behind search, translation, recommendations, and more. Scraping Reddit or other large sources of user-generated content can supply fresh training data. Machine learning projects can also benefit from large-scale collection from search engines like Bing, DuckDuckGo, or Google.

Scraping data from a website allows one to gather high-quality data sets to generate insights and drive growth.

The web scraping process

The scraping process involves:

- Configuring the scraper with target sites and required data points.

- Fetching the raw HTML from each page.

- Parsing the HTML to identify and extract relevant data using tags, classes, IDs, and similar selectors.

- Storing the extracted data in structured formats like CSV or JSON.

Scrapers can also render JavaScript, handle cookies and sessions, extract media, paginate through sites, and more.
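Pagination is one of the easier capabilities to illustrate. The sketch below follows "next" links until none remain. Everything in it is an assumption for the example: the `FAKE_SITE` dictionary stands in for real HTTP responses, the `title` class and `rel="next"` link are made-up markup, and the regular expressions only work because that markup is so regular (a real scraper would normally use an HTML parser).

```python
import re

# Hypothetical three-page site kept in memory so the sketch runs offline.
FAKE_SITE = {
    "/jobs?page=1": '<h2 class="title">Data Engineer</h2>'
                    '<a rel="next" href="/jobs?page=2">Next</a>',
    "/jobs?page=2": '<h2 class="title">ML Researcher</h2>'
                    '<a rel="next" href="/jobs?page=3">Next</a>',
    "/jobs?page=3": '<h2 class="title">Web Developer</h2>',
}

TITLE_RE = re.compile(r'<h2 class="title">(.*?)</h2>')
NEXT_RE = re.compile(r'<a rel="next" href="(.*?)">')


def crawl(start_url, fetch, max_pages=10):
    """Collect titles page by page, following the 'next' link on each page.

    `fetch` is any callable that takes a URL and returns that page's HTML.
    The loop stops when there is no next link, when a URL repeats, or when
    `max_pages` pages have been visited.
    """
    titles = []
    seen = set()
    url = start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        titles.extend(TITLE_RE.findall(html))
        match = NEXT_RE.search(html)
        url = match.group(1) if match else None
    return titles


print(crawl("/jobs?page=1", FAKE_SITE.__getitem__))
```

Passing `fetch` in as a parameter keeps the crawl logic separate from the network layer, so the same loop could be reused with a real HTTP client or a headless browser.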

Is scraping the web illegal?

Web scraping public data is generally legal. However, aggressively scraping sites against their Terms of Service, using extracted data commercially without permission, or scraping behind paywalls or logins may violate laws or regulations.

It’s best to check a site’s ToS and robots.txt file to understand if and how scraping can be done. Using proxies and throttling requests helps avoid over-scraping issues.
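Checking robots.txt can be automated with Python's standard `urllib.robotparser` module. The sketch below is only an illustration: the `ROBOTS_TXT` content, the `example-scraper` user agent, the example.com URLs, and the `polite_delay` helper are assumptions, and the rules are parsed from a string so the example runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download the site's own file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

AGENT = "example-scraper"  # assumed user-agent name for this sketch


def build_rules(robots_text):
    """Parse robots.txt text into a RobotFileParser without any network calls."""
    rules = RobotFileParser()
    rules.parse(robots_text.splitlines())
    return rules


def polite_delay(rules, agent, default=1.0):
    """Seconds to wait between requests: the site's Crawl-delay, else a default."""
    delay = rules.crawl_delay(agent)
    return float(delay) if delay else default


rules = build_rules(ROBOTS_TXT)
for url in ("https://example.com/products", "https://example.com/private/report"):
    allowed = rules.can_fetch(AGENT, url)
    print(url, "allowed" if allowed else "disallowed")
print("wait between requests (s):", polite_delay(rules, AGENT))
```

A scraper would call `time.sleep(polite_delay(rules, AGENT))` between requests and skip any URL for which `can_fetch` returns `False`. Note that robots.txt does not replace reading the Terms of Service.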

Ways to scrape a website

- Python libraries like Beautiful Soup and Scrapy require coding skills but work on most sites.

- Browser extensions like Bardeen simplify ad-hoc scraping from the browser.

- Cloud scraping APIs like ScraperAPI are easy to implement and handle proxies, browsers, and similar infrastructure for you.

- Ruby and its scraping libraries also provide a wide set of options for quality data collection.

- No-code tools like Octoparse and ParseHub allow visual scraping configuration without coding.

- Commercial services like Zyte offer managed scraping at scale.

The best approach depends on the use case, skill level and volume of data needed. With some learning, anyone can perform data aggregation on the web for insights.

Frequently Asked Questions

Please read our Documentation if you have questions that are not listed below.

What are some tips for effective web scraping?

Some tips include using proxies and random delays to avoid bans, inspecting pages to identify optimal selectors, testing scrapers incrementally, using APIs or feeds if available, and checking robots.txt and terms of service. Configuring scrapers carefully and not overloading sites are key.
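The random-delay tip can be combined with retries. The following is a rough sketch under stated assumptions: `backoff_delays` and `fetch_with_retries` are hypothetical helper names, `fetch` is any function you supply that downloads a URL, and the exponential backoff with jitter is one common choice among several, not a required technique.

```python
import random
import time


def backoff_delays(retries, base=1.0, cap=30.0, rng=random):
    """Yield one randomized delay per retry, with an exponentially growing ceiling."""
    for attempt in range(retries):
        ceiling = min(cap, base * 2 ** attempt)
        yield rng.uniform(0, ceiling)


def fetch_with_retries(fetch, url, retries=4, sleep=time.sleep):
    """Call fetch(url), sleeping a random, growing interval after each failure."""
    last_error = None
    for delay in backoff_delays(retries):
        try:
            return fetch(url)
        except OSError as error:  # covers typical network-level failures
            last_error = error
            sleep(delay)
    raise RuntimeError(f"giving up on {url}") from last_error


# Usage with a stand-in fetch function that fails twice before succeeding.
attempts = {"count": 0}


def flaky_fetch(url):
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("temporary failure")
    return f"<html>content of {url}</html>"


# sleep is replaced with a no-op here so the demonstration finishes instantly.
print(fetch_with_retries(flaky_fetch, "https://example.com/page", sleep=lambda s: None))
```

Randomizing the wait makes request timing less regular, and growing the ceiling after each failure eases the load on a site that is already struggling.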

What are the legal risks of web scraping?

Key legal risks include violating copyright, terms of service, data protection laws, and hacking/computer fraud laws in some cases. Only scrape public data in a non-disruptive way and don't repurpose data without permission. Consult an expert for your use case.

Can I scrape data behind a login or paywall?

Scraping restricted data typically violates terms of service. However, it may be possible with additional techniques like reverse engineering APIs, mimicking browser sessions, or gaining access through a legitimate subscription. Proceed with caution in these areas.
