Reddit is often called the front page of the Internet. Over the years, the site has built a monthly audience of more than 400 million users, and it currently hosts more than 100,000 communities. This makes Reddit an excellent source of data for web scraping. In the following paragraphs, we’ll look at the best tactics for scraping Reddit and the tricks that help along the way.

Why Do You Need to Scrape Reddit?

Because this large audience is divided into communities built around specific interests, you can easily find and gather useful data on particular topics. For example, you can track mentions of your products or brand in discussions and collect all the opinions about them. In the same way, you can track customer problems or questions and answer them directly and quickly.

Another broad area of application is tracking emerging trends and markets in general. Many popular trends start on Reddit, so you can take advantage of this. You can also monitor news and content related to the subjects you care about. Web scraping with Node.js or other tools lets you gather data about posts, upvotes, comments, and other user content. In the same way, you can scrape other major sources of user-generated content, such as Twitter, or industry-specific sites such as LinkedIn and other job posting sites.

What Data Can You Get From Reddit?

You can scrape different parts of the website to obtain exactly the data you need while staying within legal limits. For instance, you can gather posts and community data from subreddits.

You can also look at the posts made by a single user and harvest post titles, comments, vote counts, and other data. From comments alone, you can collect the comment time, points, usernames, and URLs. In this manner, you can download user histories and comments.

In the same way, you can search by keyword or URL and gather all the information related to a subject. Basically, you can use Reddit data scraping to harvest almost any part of the site. Remember, however, that some of this information may be protected by personal data laws. Before you start scraping and parsing, double-check all the legal details that could affect your project.

New Reddit API Policy

Reddit provides its own API for interacting with the site. In some cases, this can be an excellent alternative to a web scraper. However, there are several details to keep in mind when using the API.

First of all, Reddit requires authentication before you can extract any data through the API. Any commercial use of the API also requires separate approval from Reddit.

The API also carries a risk of bans, because Reddit grants access only through a token that you receive after registration, and keeping that access depends on following Reddit's rules. This is also inconvenient, since Reddit has its own set of rules regulating how the API can be used in different scenarios.

Also keep in mind that in summer 2023, Reddit started charging for API use beyond its free limits. Many users opposed these changes, but Reddit implemented them anyway. This shows that Reddit may enforce other rules in the future that could affect your project.

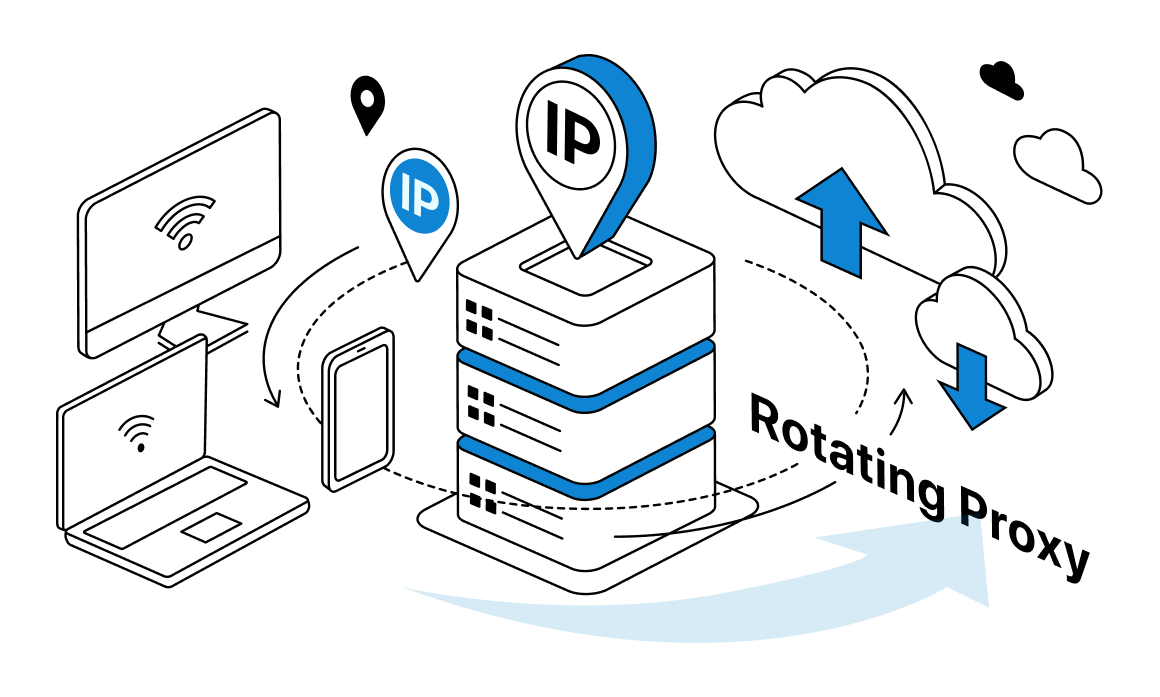

Considering all these nuances, web scraping can be a good alternative to the API. With the right proxy for scraping data from Reddit, or for scraping data into Excel, you can collect all the information you need and increase the volume of data you can harvest. For instance, you can use rotating datacenter proxies or other types of rotating proxies for better performance and strong protection against bans. The same setup also works as proxies for Scrapebox in SEO tasks.

Reddit API vs Reddit Scraping

In summer 2023, Reddit began limiting unauthenticated API requests to 10 per minute. If you register and authenticate, you can make up to 100 requests per minute. Originally, Reddit planned to charge all API users for every query. This happened because scraping Reddit had become common practice for training AI models such as ChatGPT.
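Whether you use the API or scrape pages directly, spacing out requests keeps you under limits like these: 100 requests per minute works out to one request every 60 / 100 = 0.6 seconds, and 10 per minute to one every 6 seconds. The sketch below uses a hypothetical helper, `MinuteRateLimiter`, which is not part of any Reddit library. It assumes the limit is counted per minute and simply enforces an even gap between calls, which is more conservative than spending the whole budget in a burst.

```python
import time
from typing import Callable, Optional


class MinuteRateLimiter:
    """Hypothetical helper: space calls evenly to stay under N requests per minute."""

    def __init__(
        self,
        requests_per_minute: int,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        # Minimum gap between two requests, in seconds (e.g. 60 / 100 = 0.6).
        self.interval = 60.0 / requests_per_minute
        # Clock and sleep are injectable so the limiter can be tested without waiting.
        self._clock = clock
        self._sleep = sleep
        self._next_allowed: Optional[float] = None

    def wait(self) -> None:
        """Block until the next request is allowed, then reserve the following slot."""
        now = self._clock()
        if self._next_allowed is not None and now < self._next_allowed:
            self._sleep(self._next_allowed - now)
            now = self._next_allowed
        self._next_allowed = now + self.interval


# Usage: create MinuteRateLimiter(100) once and call .wait() before every request.
```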

For now, subreddits are still accessible without logging in, so you can gather the data you need as before. Even with plain datacenter proxies, you can obtain all the information you want for your project.

Scraping Reddit With Selenium

Let’s see how to build a simple web scraper with Selenium. You can also scrape with Ruby or other tools, but here we’ll use Python. First, install the latest version of Python and a Python IDE. Then create the folders and base files for your scraper.

Reddit relies heavily on JavaScript for its interactivity, so we need a tool that renders pages in a real browser environment. To scrape these dynamic pages, we will use Selenium. First, install Selenium and import the libraries you need.

By default, Selenium launches a new browser window with a GUI every time you start scraping. Because this can use a lot of resources, you can configure the browser to run in headless mode. After that, we can navigate to the Reddit page we need.
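A minimal setup sketch, assuming Chrome and Selenium 4.6 or later (where Selenium Manager downloads a matching driver automatically). The helper names `chrome_arguments` and `make_driver` are hypothetical, and the window size is an arbitrary example. The Selenium import sits inside `make_driver` only so the flag list can be checked without a browser installed.

```python
# Install first: pip install selenium


def chrome_arguments(headless: bool = True) -> list[str]:
    """Return the Chrome command-line flags for the scraping session."""
    # A fixed window size keeps the page layout predictable in headless mode.
    args = ["--window-size=1920,1080"]
    if headless:
        # "--headless=new" selects Chrome's current headless mode (Chrome 109+).
        args.append("--headless=new")
    return args


def make_driver(headless: bool = True):
    """Start a Chrome WebDriver configured with the flags above."""
    from selenium import webdriver  # imported lazily; requires `pip install selenium`

    options = webdriver.ChromeOptions()
    for arg in chrome_arguments(headless):
        options.add_argument(arg)
    return webdriver.Chrome(options=options)
```

Pass `headless=False` while debugging so you can watch the browser, then switch back for long runs.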

To begin scraping Reddit, find the URL of the page you need and open it with Selenium. To do this, configure the WebDriver for your browser, maximize the browser window, and pass in the URL to scrape. Then run the script as a test: if the browser displays a message saying it is being controlled by automated test software, the setup works.
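Opening the page can be sketched as follows. `subreddit_url` and `open_subreddit` are hypothetical helpers; `driver` is any Selenium WebDriver (for example `webdriver.Chrome()`), and only its standard `maximize_window()` and `get()` methods are used. The subreddit name `python` in the usage comment is just an example.

```python
def subreddit_url(name: str) -> str:
    """Build the URL of a subreddit's main page, e.g. https://www.reddit.com/r/python/."""
    # Accept both "python" and "r/python" (str.removeprefix needs Python 3.9+).
    return f"https://www.reddit.com/r/{name.strip().removeprefix('r/')}/"


def open_subreddit(driver, name: str) -> str:
    """Maximize the browser window and load the subreddit in the given WebDriver."""
    url = subreddit_url(name)
    driver.maximize_window()
    # get() waits for the initial page load; dynamic content may still arrive later.
    driver.get(url)
    return url


# Usage (requires Selenium and Chrome):
#   from selenium import webdriver
#   driver = webdriver.Chrome()
#   open_subreddit(driver, "python")
```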

To find the HTML elements and attributes you need, inspect the page with your browser's DevTools. Before you start scraping, create a dictionary to hold all the information you gather.

First, you can collect information such as the post creation date, the number of subreddit followers, and post descriptions. To read the attributes you need, use Selenium's get_attribute() method. Remember to add all the collected data to your dictionary.
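Reading attributes into the dictionary might look like this sketch. The CSS selectors and attribute names in `SUBREDDIT_FIELDS` are hypothetical placeholders; Reddit's markup changes often, so replace them with what you find in DevTools. The string `"css selector"` is the value of Selenium's `By.CSS_SELECTOR`, written out so the function does not need to import Selenium.

```python
from typing import Optional

# Hypothetical field map: key -> (CSS selector, attribute to read).
# Replace both values with the ones you find in DevTools for the current Reddit markup.
SUBREDDIT_FIELDS = {
    "description": ("#subreddit-description", "textContent"),
    "created": ("#subreddit-created time", "datetime"),
    "followers": ("#subreddit-members", "textContent"),
}


def scrape_attributes(driver, fields: dict[str, tuple[str, str]]) -> dict[str, Optional[str]]:
    """Fill a dictionary with one attribute value per field."""
    data: dict[str, Optional[str]] = {}
    for key, (selector, attribute) in fields.items():
        # "css selector" equals selenium.webdriver.common.by.By.CSS_SELECTOR.
        # find_element raises NoSuchElementException if the selector matches nothing.
        element = driver.find_element("css selector", selector)
        data[key] = element.get_attribute(attribute)
    return data
```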

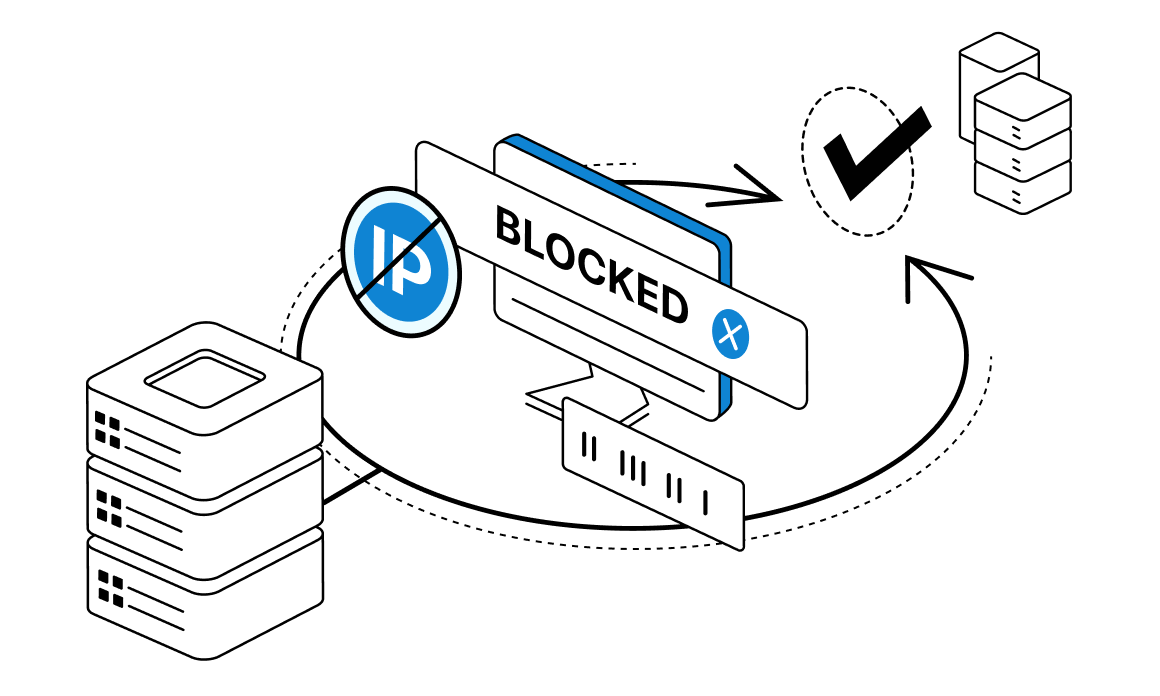

Remember that sending repeated, persistent requests to Reddit from a single IP address can result in a ban or other restrictions. You can prevent this with a dedicated proxy for Reddit, which boosts your scraping success rate and shields your project from bans.
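One way to route Selenium through a proxy is Chrome's `--proxy-server` switch, sketched below. `proxy_argument` and `add_proxy` are hypothetical helpers, and the addresses in the usage comment are documentation placeholders for your provider's host and port. Chrome does not accept a username and password in this flag, so for authenticated proxies you would typically whitelist your IP address with the provider instead.

```python
def proxy_argument(host: str, port: int, scheme: str = "http") -> str:
    """Build Chrome's --proxy-server switch, e.g. --proxy-server=http://203.0.113.10:8080."""
    return f"--proxy-server={scheme}://{host}:{port}"


def add_proxy(options, host: str, port: int, scheme: str = "http"):
    """Add the proxy switch to a selenium.webdriver.ChromeOptions object and return it."""
    options.add_argument(proxy_argument(host, port, scheme))
    return options


# Usage (requires Selenium and Chrome):
#   from selenium import webdriver
#   options = add_proxy(webdriver.ChromeOptions(), "203.0.113.10", 8080)
#   driver = webdriver.Chrome(options=options)
```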

Now we can start collecting the actual posts from the subreddit. Inspect the HTML again and find the element that wraps each post, so you can select all posts at once. In the same way, you can select the upvote count or the comments section. This lets you pick out all the data your scraper needs.
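Collecting every post at once is a matter of Selenium's `find_elements`, which returns all matches (or an empty list). In this sketch, the selector `shreddit-post` and the attribute names `post-title`, `score`, `comment-count`, and `permalink` are assumptions about Reddit's redesigned markup at the time of writing; confirm them in DevTools before relying on them. `collect_posts` is a hypothetical helper name.

```python
from typing import Optional

# Assumed markup (verify in DevTools): each post is a <shreddit-post> element
# whose attributes carry the values we want.
POST_SELECTOR = "shreddit-post"
POST_ATTRIBUTES = {
    "title": "post-title",
    "upvotes": "score",
    "comments": "comment-count",
    "permalink": "permalink",
}


def collect_posts(
    driver,
    selector: str = POST_SELECTOR,
    attributes: Optional[dict[str, str]] = None,
) -> list[dict[str, Optional[str]]]:
    """Return one dictionary per post element currently loaded on the page."""
    attributes = attributes or POST_ATTRIBUTES
    posts = []
    # "css selector" equals By.CSS_SELECTOR; find_elements returns [] when nothing matches.
    for element in driver.find_elements("css selector", selector):
        # get_attribute returns None for attributes the element does not have.
        posts.append({key: element.get_attribute(attr) for key, attr in attributes.items()})
    return posts
```

Only posts that have already loaded are returned. Reddit loads more as you scroll, so you may need to scroll, for example with `driver.execute_script("window.scrollTo(0, document.body.scrollHeight)")`, and collect again.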

Next, we need to export this data to a JSON file. All the data you collected is stored in a Python dictionary, so you can use Python's built-in json module to write it to a single, convenient file. You should now have one structured file with all the data scraped from the site.
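With the standard-library `json` module, the export takes only a few lines. `to_json` and `export_json` are hypothetical helper names, and `reddit_data.json` in the usage comment is an example file name.

```python
import json
from pathlib import Path
from typing import Any, Union


def to_json(data: Any) -> str:
    """Serialize scraped data; ensure_ascii=False keeps emoji and non-English text readable."""
    return json.dumps(data, ensure_ascii=False, indent=2)


def export_json(data: Any, path: Union[str, Path]) -> Path:
    """Write the data to a UTF-8 JSON file and return its path."""
    target = Path(path)
    target.write_text(to_json(data), encoding="utf-8")
    return target


# Usage: export_json(scraped_data, "reddit_data.json"), where scraped_data is your dictionary.
```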

This way, you can gather as much data as you need. For large-scale projects, you can use residential proxies to increase the success rate. With the right proxies for web scraping, you can quickly gather large amounts of data with a much lower risk of being blocked.
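If your provider gives you a list of proxy endpoints rather than rotating them on its side, you can spread the work across them yourself. In this hypothetical sketch, `assign_proxies` splits a list of URLs into batches and pairs each batch with the next proxy in round-robin order. The batch size of 10 is an arbitrary example, not a Reddit-specific threshold.

```python
from itertools import cycle


def assign_proxies(
    urls: list[str], proxies: list[str], batch_size: int = 10
) -> list[tuple[str, list[str]]]:
    """Pair consecutive batches of URLs with proxies in round-robin order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    if batch_size < 1:
        raise ValueError("batch_size must be positive")
    pool = cycle(proxies)  # repeats the proxy list endlessly: p1, p2, ..., p1, p2, ...
    return [
        (next(pool), urls[start:start + batch_size])
        for start in range(0, len(urls), batch_size)
    ]
```

For each `(proxy, batch)` pair, you would start a new WebDriver configured with that proxy, scrape the batch, and call `driver.quit()` before moving on.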

Conclusion

Because of Reddit's new API policies, web scraping has become a very useful method for collecting data from Reddit. In this article, we covered the types of data you can gather and the advantages of using Reddit for data aggregation. We also described a simple way to build your own Reddit web scraper.

However, just as it changed its API policies, Reddit may introduce new anti-scraping rules and measures. In that case, you can strengthen your scraping solution with static residential proxies or another IP rotation solution. This way, even under stricter rules, you will be able to collect all the data you need.

Frequently Asked Questions

What is Reddit web scraping?

Reddit web scraping can be described as the process of collecting data from any page on Reddit. This process usually requires special tools for scraping and proxy technologies.

What proxies are the best for web scraping Reddit?

The best choice depends on your goals, since different proxies offer different benefits. If you want a single option, try residential proxies, the most versatile type: they work equally well for scraping and for anti-tracking protection.

What kind of information can you collect on Reddit?

With Reddit scraping, you can collect all the needed information about subreddits, posts, titles, comments, upvote counts, and other types of data.
