If you need to parse and regularly update tables from different websites, Excel provides all the tools you need. Its Web Query tool lets you create parsing tasks and receive the results directly in a spreadsheet. In this article, we will cover the basics of setting up and using Web Query and explain how to scrape data with Excel.

How Web Scraping Works in Excel

Excel has a built-in tool called Web Query that can parse information from websites. Web Query works together with Internet Explorer or other browsers to load pages and scrape data from a website into Excel.

Web Query renders sites and harvests the data you select from static HTML tables. While you browse the site, the built-in browser marks every table that can be scraped. You can then choose which tables to import and even schedule automatic updates for them.
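To make "harvesting static HTML tables" concrete, here is a minimal Python sketch of the same step outside Excel. It is not how Web Query works internally; it assumes well-formed static markup (no tables built by JavaScript, no nested tables), and the `TableExtractor` class and `extract_tables` function are hypothetical names used only for illustration.

```python
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collect the text of every <table> on a static HTML page.

    Assumes well-formed markup: every <tr>, <td> and <th> is explicitly closed,
    and tables are not nested inside other tables.
    """

    def __init__(self):
        super().__init__()
        self.tables = []   # one entry per <table>; each entry is a list of rows
        self._row = None   # cells of the <tr> currently being read
        self._cell = None  # text fragments of the <td>/<th> currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None

    def handle_data(self, data):
        # Only text inside a table cell is kept; everything else is ignored.
        if self._cell is not None:
            self._cell.append(data)


def extract_tables(html):
    """Return every table in `html` as a list of rows of cell strings."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    return parser.tables
```

For example, `extract_tables(html)[0]` returns the rows of the first table on the page, which is roughly what picking one of the marked tables in the Web Query window gives you.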

Using an Excel Web Query

Now you can start practicing scraping data from a website into Excel. This guide covers only the basic setup for parsing data from a single site. To begin, create a new workbook and open the “Data” tab on the Excel ribbon.

You should now see several options for importing data. Select “From Web”, and in the new window, type or paste the address of the site you want. For a first attempt, we recommend a site built specifically for practicing data scraping into Excel.

In the newly opened window, find the tables you want to import into your Excel file. Every table that can be parsed has a small yellow mark next to it. When you find the element you need, click this mark and choose to import the data into an “Existing worksheet”.

You have now imported your first scraped data into an Excel file and created a data table with the information you need. With this setup, Excel sends requests to the selected site and writes the scraped data into your file.
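If you also want to keep a copy of a scraped table outside the workbook, CSV is the simplest format Excel opens directly. The Python sketch below assumes the tables are already parsed into lists of rows of strings; `table_to_csv` and `save_table` are hypothetical helper names, and the file is written with a UTF-8 byte-order mark so that Excel recognizes the encoding.

```python
import csv
import io


def table_to_csv(rows):
    """Serialize one scraped table (a list of rows of strings) as CSV text."""
    buffer = io.StringIO()
    # csv.writer quotes any cell that contains a comma, quote or line break.
    csv.writer(buffer).writerows(rows)
    return buffer.getvalue()


def save_table(tables, index, path):
    """Save the table at position `index` to a CSV file that Excel can open."""
    # utf-8-sig writes a byte-order mark so Excel reads the file as UTF-8;
    # newline="" keeps the csv module's own \r\n line endings untouched.
    with open(path, "w", newline="", encoding="utf-8-sig") as f:
        f.write(table_to_csv(tables[index]))
```

For example, `save_table(tables, 0, "prices.csv")` saves the first parsed table, and you can then open `prices.csv` in Excel like any other file.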

You can also consider using rotating datacenter proxies to power up your scraping projects. A proxy for scraping software gives you a stable connection with the capacity you need for scraping data into Excel.
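Rotating proxies means sending each request through the next proxy in a pool. Here is a minimal Python sketch of round-robin rotation, assuming your provider gives you a list of proxy URLs (the addresses below are placeholders, and `ProxyRotator` is a hypothetical name). Some providers instead rotate on their side behind a single gateway address, in which case you only need one URL.

```python
import itertools
import urllib.request


class ProxyRotator:
    """Hand out proxies from a pool in round-robin order, one per request."""

    def __init__(self, proxy_urls):
        urls = list(proxy_urls)
        if not urls:
            raise ValueError("the proxy pool is empty")
        self._pool = itertools.cycle(urls)

    def next_proxy(self):
        """Return the next proxy URL, wrapping around at the end of the pool."""
        return next(self._pool)

    def opener(self):
        """Build a urllib opener that sends HTTP and HTTPS traffic via the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


# Placeholder addresses: replace them with the endpoints from your proxy provider.
rotator = ProxyRotator([
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
])
```

Calling `rotator.opener().open(url, timeout=10)` for each page would then send consecutive requests through different proxies.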

Excel Web Query Advanced Options

Once your Web Query is ready, you can customize it further with the Web Query Editor. To open it, click a cell in the imported data range, then choose Table and Edit Query. These commands also refresh the tables automatically. If the table on the site has changed significantly, the refreshed data may come out less structured than before.
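Because a heavily changed page can turn a clean table into unstructured data, it can help to compare each refresh with the previous version before trusting it. This Python sketch is our own illustration, not an Excel feature: `check_refresh` is a hypothetical helper that flags a changed header row or rows with the wrong number of cells.

```python
def check_refresh(old_rows, new_rows):
    """Compare a refreshed table with its previous version.

    Both arguments are lists of rows (lists of cell strings), header row first.
    Returns a list of problems; an empty list means the layout looks unchanged.
    """
    if not new_rows:
        return ["refreshed table is empty"]
    problems = []
    old_header = old_rows[0] if old_rows else []
    new_header = new_rows[0]
    if new_header != old_header:
        problems.append(f"header changed: {old_header} -> {new_header}")
    width = len(new_header)
    # Row numbers are 1-based like spreadsheet rows; the header is row 1.
    for number, row in enumerate(new_rows[1:], start=2):
        if len(row) != width:
            problems.append(f"row {number} has {len(row)} cells, expected {width}")
    return problems
```

If the returned list is not empty, it is usually worth reviewing the query in the Web Query Editor before relying on the new data.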

You can also schedule further refreshes of the tables. First, choose Connection Properties at the bottom of the list. This opens a dialog where you can find the Usage tab and set up a refresh schedule.

You can set the interval to suit your needs, but remember that Excel updates the data only while the workbook is open. You can also use static residential proxies to access sites that are blocked or restricted in your area. We discuss automatic update options in more detail later in this article.

Parsing Web Data with Excel VBA

Excel has built-in support for a programming language called Visual Basic for Applications (VBA). To use it in your sheets, open the Developer tab and find the Visual Basic option there. Workbooks that contain VBA code must be saved in the macro-enabled .xlsm format instead of the standard .xlsx format.

VBA in Excel works by executing the instructions written in your code. To start harvesting data with VBA, you need to write scripts and macros that send requests to sites and receive data in return. On Windows, this can be done through WinHTTP or Internet Explorer. To ensure reliable access to the sites you need, consider using residential proxies. This way, you can view and scrape Google or any other site, even if it is blocked in your region.
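For comparison, the request a VBA macro sends through WinHTTP can be sketched in Python with the standard `urllib.request` module. This is a minimal sketch, assuming a plain GET request is enough for the target page; `fetch_page` is a hypothetical name, the proxy URL is whatever your provider gives you, and the User-Agent string is a placeholder.

```python
import urllib.request


def fetch_page(url, proxy=None, timeout=10.0):
    """Download a page as text, optionally through an HTTP(S) proxy.

    Plays roughly the same role as a WinHTTP GET request in a VBA macro.
    """
    handlers = []
    if proxy:
        # Route both plain HTTP and HTTPS requests through the given proxy.
        handlers.append(urllib.request.ProxyHandler({"http": proxy, "https": proxy}))
    opener = urllib.request.build_opener(*handlers)
    request = urllib.request.Request(
        url, headers={"User-Agent": "Mozilla/5.0 (compatible; scraping-sketch)"}
    )
    with opener.open(request, timeout=timeout) as response:
        # Use the charset the server declares, falling back to UTF-8.
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

A call such as `fetch_page("https://example.com/prices", proxy="http://user:pass@proxy.example.com:8080")` returns the page HTML, which you can then pass to a table parser.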

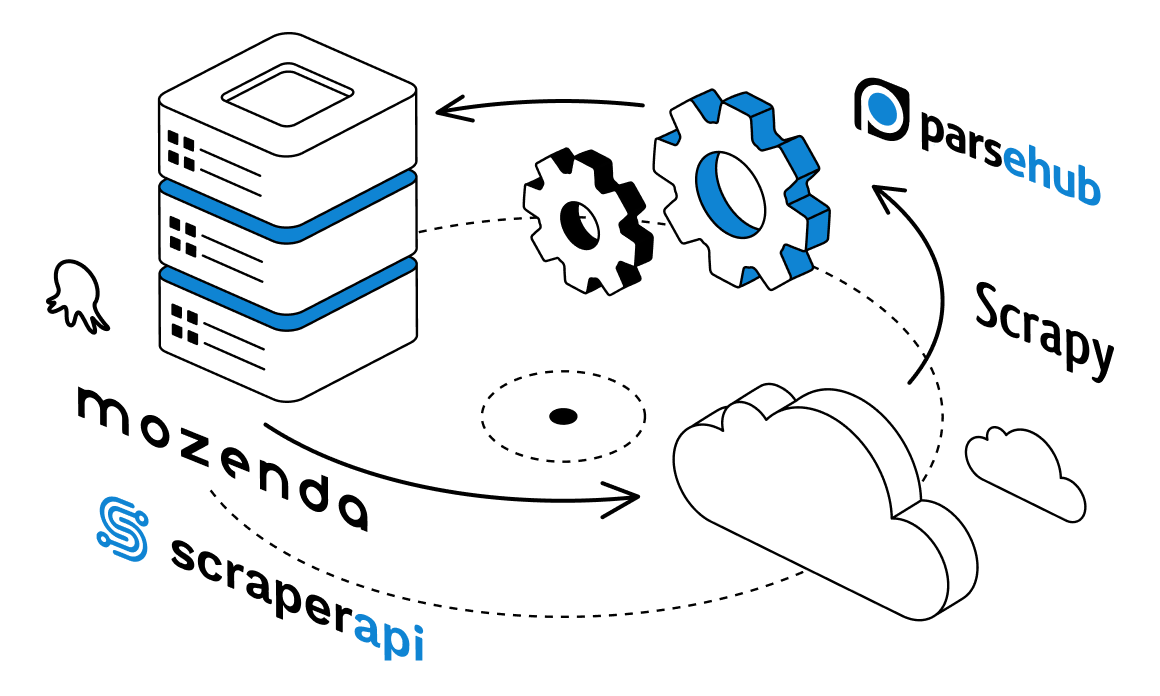

Parsing with VBA is a much more complicated process that requires at least basic programming knowledge. In return, web scraping through VBA can be faster and more flexible for long-term projects. Alternatively, you can use ready-made scraping solutions written in popular languages like Python, or tools that require no coding at all.

How to Update Data – Automatic Update

Data received through Web Query can be updated manually or automatically. In this guide, we look at the automatic option, in which Excel re-parses the data at a set interval so that your sheet always stays up to date. You can also use datacenter proxies to bypass blocks and keep stable access when scraping Reddit or any other site.

Auto-refresh can be enabled in a few easy steps. First, open the context menu of a cell that contains Web Query data and click “Data Range Properties”. This opens the “External Data Range Properties” dialog, where you need to find the “Refresh Controls” section. Adjust the settings to your needs and enable background refresh. For example, if you set the document to refresh every 30 minutes, Excel will update all the data every half hour. You can also enable the option to refresh the data every time you open the file.
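Outside Excel, the same "refresh every 30 minutes, plus once when the file opens" behavior can be sketched as a simple Python loop. This is an illustration under our own assumptions: `run_auto_refresh` is a hypothetical name, `refresh` stands for whatever function re-downloads and re-parses your data, and, just as with Excel, nothing is refreshed once the script stops running.

```python
import time


def run_auto_refresh(refresh, interval_minutes=30, refresh_on_open=True,
                     max_runs=None, sleep=time.sleep):
    """Call `refresh` every `interval_minutes`, similar to Excel's periodic refresh.

    refresh_on_open mirrors the "refresh data when opening the file" option.
    max_runs caps the total number of refreshes (None means run until interrupted).
    sleep is injectable so the schedule can be tested without real waiting.
    Returns the number of refreshes performed.
    """
    runs = 0
    if refresh_on_open and (max_runs is None or max_runs > 0):
        refresh()
        runs += 1
    while max_runs is None or runs < max_runs:
        sleep(interval_minutes * 60)  # wait one interval, in seconds
        refresh()
        runs += 1
    return runs
```

The `sleep` parameter defaults to `time.sleep`; passing a different function lets you check the schedule without actually waiting half an hour.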

Frequently Asked Questions

Please read our Documentation if you have questions that are not listed below.

-

What tools does Microsoft Excel have for web scraping?

Microsoft Excel has a built-in tool called Web Query. It helps you scrape data from different websites into Excel files.

-

What proxies are the best for web scraping?

Web scraping can be performed with many different tools and programs, and each use case may call for a different type of proxy. For scraping with Excel, you can use residential proxies to bypass site blocks and restrictions.

-

What kind of data can you scrape with Excel?

Excel can scrape static HTML tables from the site of your choice. During setup, Excel opens the site in its built-in browser, and you can scroll through the page to choose the tables to scrape.
